Introduction

Cloud. Automation. Analytics. With the rapid influx of tech developments and trends that affect business, organizations are doing everything they can to embrace digital transformation to meet the demands of a fast-changing business landscape and new regulatory standards.

Unfortunately, the focus remains on superficial digital transformation aspects: New customer service tools. New automation tools for work processes. Fancy mobile apps and web interfaces. It is only after the initial excitement dies down that companies realize these digital initiatives did little to actually transform their business.

Digital transformation isn’t just about experimenting with technology – it’s about rebooting the organization’s systems and transforming the business itself. But as companies implement digital transformation initiatives, they come to the realization that they cannot let go of legacy systems.

As if legacy systems were not enough pain, companies also begin to realize they have poor data quality. They cannot replace legacy systems or migrate from an old application to a new CRM until they have clean master data. When companies are hit with this realization, they either act fast to get it sorted or keep the whole transformation idea on the back burner.

Working with private and government organizations for nearly two decades, we’ve seen how poor master data quality residing in outdated legacy systems can potentially destroy a business. Loss of revenue and business credibility, employee fatigue, talent drain, and stressed management are all problems that can drag a business down.

This whitepaper is an attempt at finding a middle-ground solution. Giving up a legacy system and improving master data quality are huge and expensive undertakings that do not happen on a whim. Keeping this limitation in mind, there ought to be a middle ground, one that enables a business to optimize its data while making the gradual move from legacy to modern.

Why Legacy Systems are Still Being Used in Organizations

A legacy system is any computer system, programming language, application, or process that can no longer receive support and maintenance. Because such systems have been woven into the very foundation of the organization, they are not easily replaced or updated. While the system may still function well, it does not support growth. As an example, a legacy CRM may not offer integrations with third-party marketing platforms or tools like Google or Facebook.

As technology advances, most organizations find themselves dealing with the oddities of a legacy system. Even with updates, some systems keep the company in a rut, preventing growth or scaling opportunities.

Despite being a bottleneck, legacy systems are still being used in organizations. There are several reasons for this:

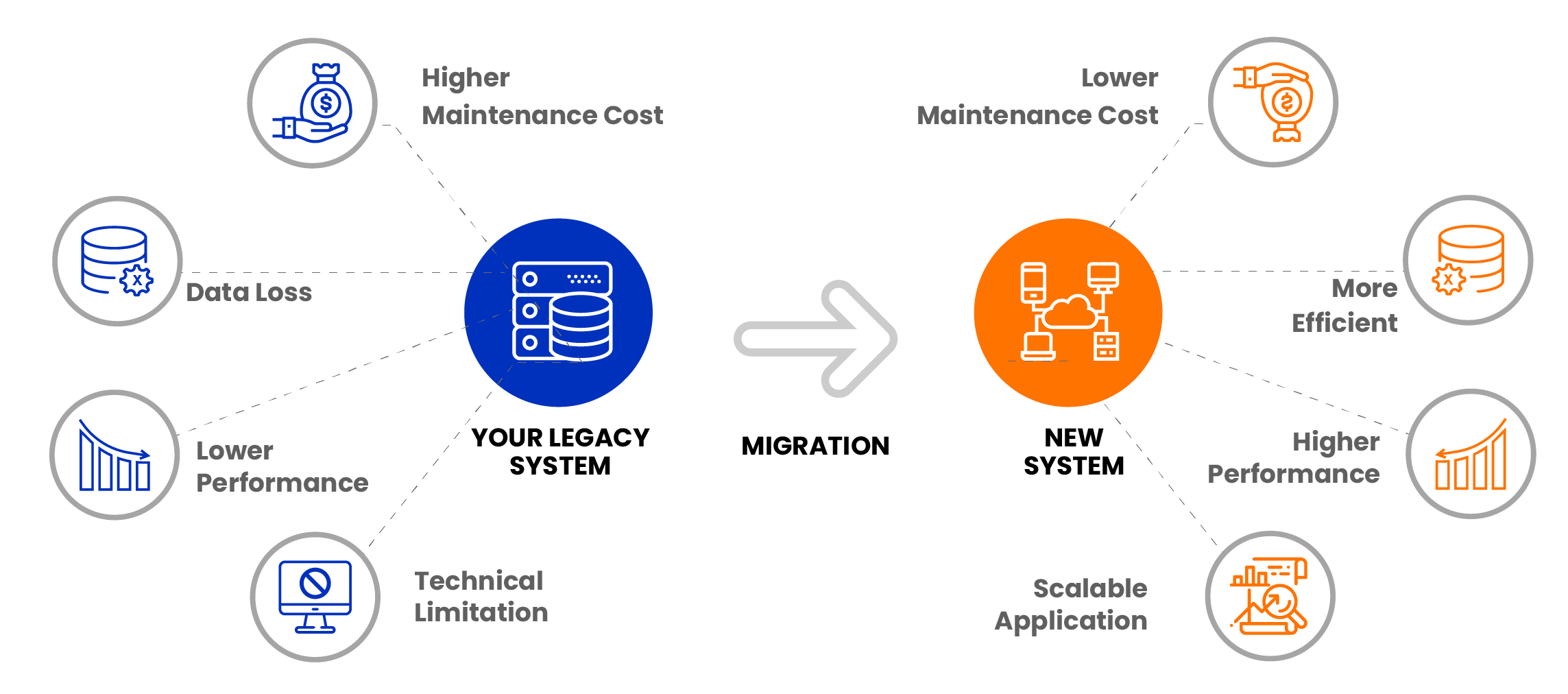

What are the Risks Associated with Legacy Systems?

Although legacy systems are integral to business operations, they can cause a myriad of problems, the most critical being exorbitant maintenance and update costs. As much as organizations are afraid of the up-front costs of upgrading, they are paying even more to maintain what they have. The main difference? Maintenance is a gradual expense that does not alter how the business functions.

64% of public sector IT spend is consumed by legacy IT.

– Global Data, Open Text Innovation Tour

A legacy system may offer stability, reliability, and familiarity, but in a rapidly-changing digital landscape, these systems may become a costly liability. The issues associated with legacy systems far outweigh the convenience of continuing to use them.

Some of the most critical problems are:

There is only so much you can do in a legacy system. It’s like forcing an old horse to win a race: you may make it run, but you can never make it outperform other horses.

Don’t Let Lagging Legacy Systems Affect Your Business

Additional risks we’ve seen some of our clients suffer from include:

- Legacy systems are unstable and often incompatible with new OS, browsers, or other applications.

- Companies may stall upgrades but they always have to keep improving one component or the other.

- Sometimes fixing one component causes other components to malfunction.

- The more patches these systems have, the more susceptible they are to security issues.

- As the years go by, performance will be slower, failure will be more frequent and efficiency will take a big hit. Considering the pace at which technology is moving, legacy systems will fail in the next five to ten years.

- Talent with legacy system knowledge and expertise will no longer be available to manage and sustain the system.

- Data from legacy systems will no longer be reliable or good enough for business intelligence analysis.

So while organizations may stall an upgrade of their legacy system or a critical migration, they will eventually have to realize that the risks of outdated systems far outweigh the current benefits of using them.

Legacy Systems and Poor Data Quality Hindering Digital Transformation

Master data is an organization’s raw data that remains unchanged over a period of time. Every organization will have master data on its products, customers, finances, operations, processes, and other operational areas. This data, while previously ignored in the depths of oblivion, has immense value in today’s age. It plays a crucial role in a digital transformation strategy. Unfortunately, master data in most organizations is not robust, reliable, or up-to-date.

It seems obvious that companies should already be ramping up their data management game plan if they haven’t yet done so in 2020. But while companies invested in fancy technologies, they neglected to upgrade their data management processes, causing their data to become a significant roadblock in any digital transformation initiative they try to take on.

Data is central to the operation of a business. In today’s digitally empowered world, however, data is more than just a component of a business. It is the lifeline of a business. The quality of the underlying data is paramount in making correct business decisions.

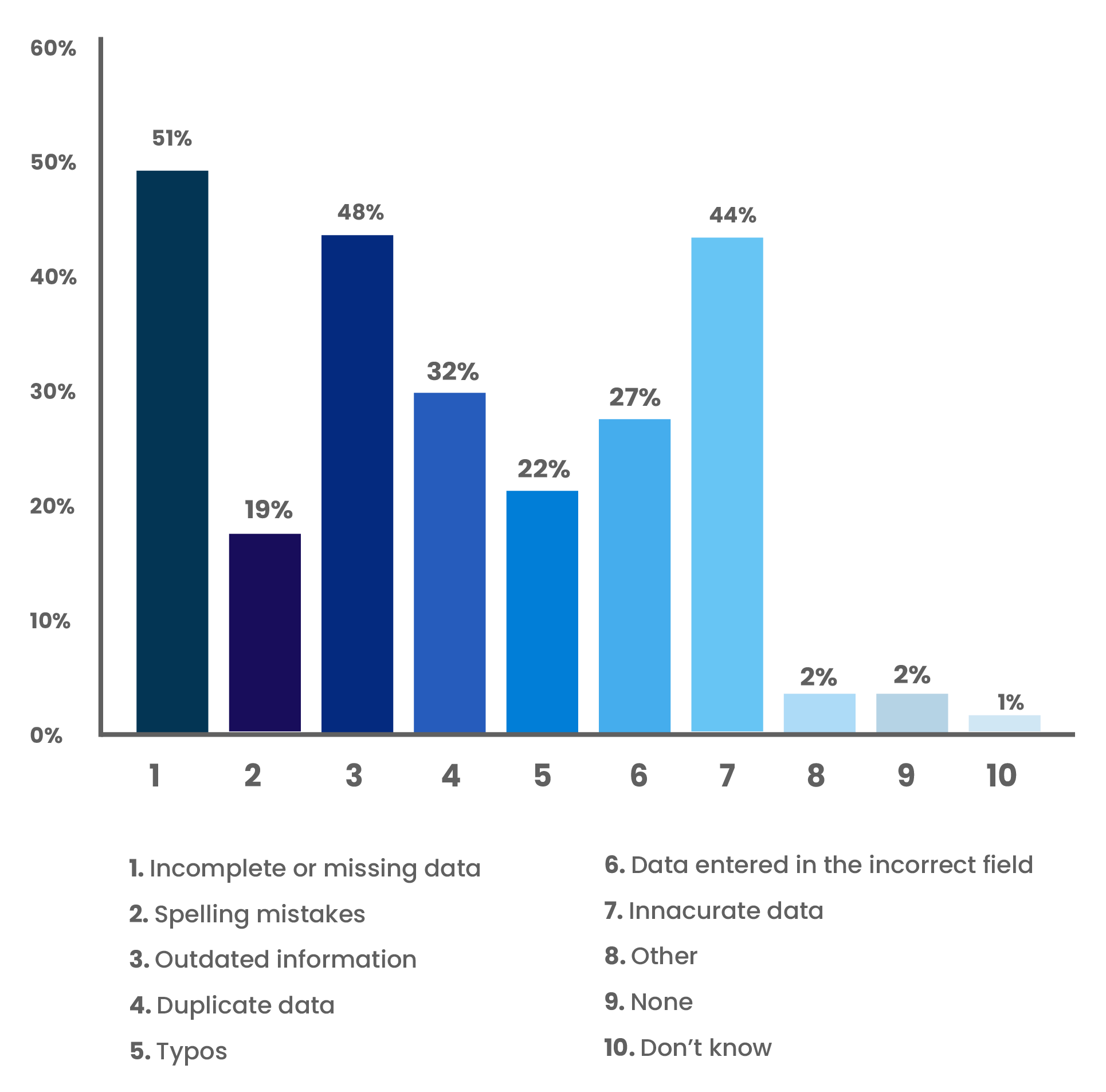

But what do we specifically mean by poor data quality? And how does a legacy system affect data quality?

Here’s what we found out after years of working with our clients.

Understanding Poor Data Quality

When we talk about data quality, we’re talking about the accuracy, consistency, reliability, usefulness, and completeness of data. Poor data quality refers to data that is incorrect, invalid, irrelevant, or outdated.

Examples of Good Data Quality:

Accurate shipping address

Complete vendor information

Correct contact information, including correctly spelled names, email addresses, etc.

Consistent standards (first letters of names capitalized, no abbreviations, etc.) across different data silos.

Organized information where customer info can easily be accessed by any department

Reason for Maintaining High Quality Data

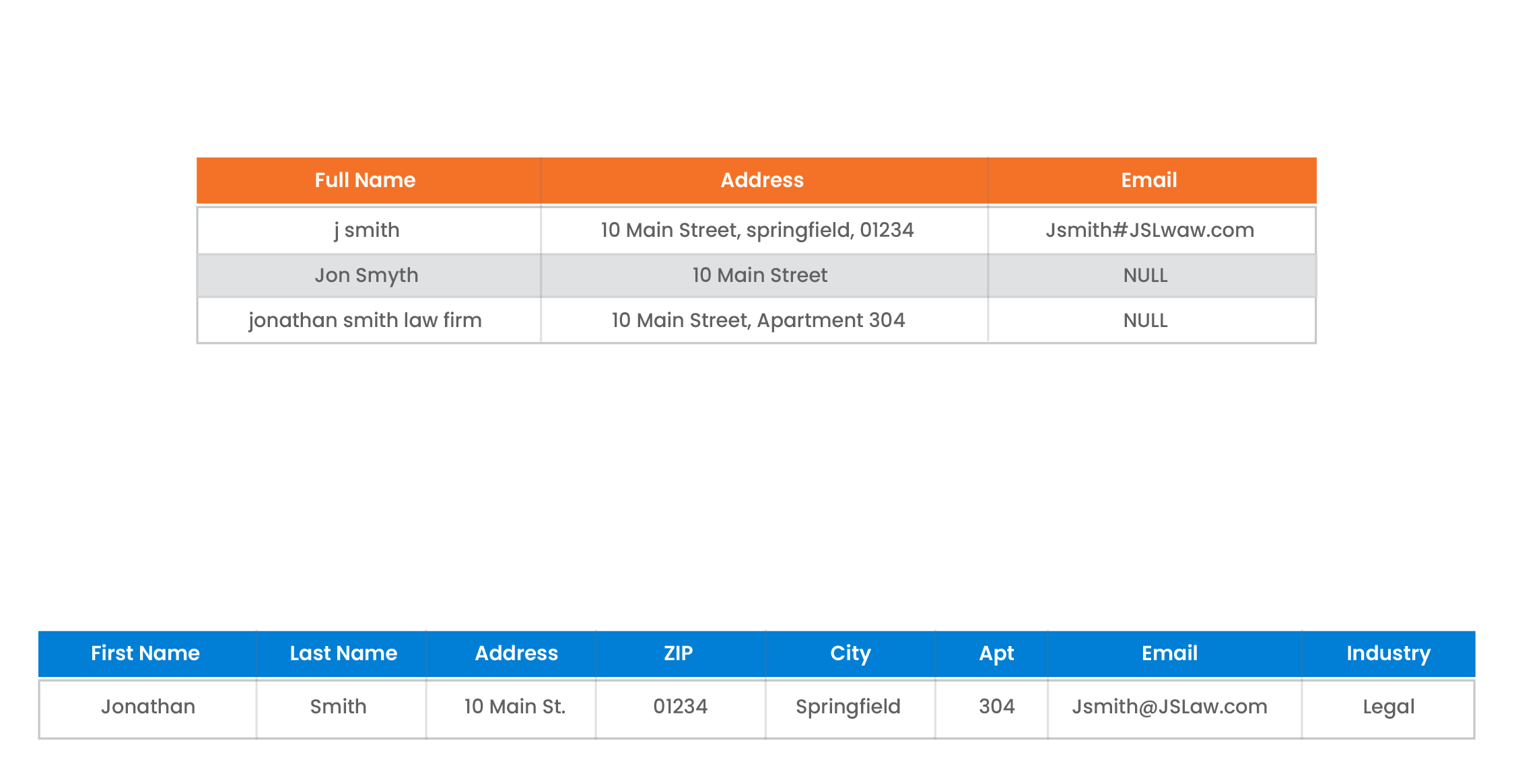

Examples of BAD Data Quality:

Incomplete contact data, such as addresses with missing zip codes or phone numbers with missing city codes.

Mangled data where one record does not represent one entity but different entities.

Data that has no unified meaning and can easily be misinterpreted.

Data that is unstructured or corrupt but is still occupying space in databases.

Data that is duplicated and redundant found in multiple systems.

Although these examples may sound pretty basic, in the long run, they become a nightmare for IT teams and data analysts hired to optimize or upgrade the company’s digital infrastructure.

In fact, when data is migrated from one existing database to another, it presents a host of quality issues.

Let’s see some situations where data quality can be a significant roadblock to a transformation plan.

Situations that Create or Exacerbate Data Quality Issues

Data Migration Process: If data was manually entered into the legacy system and remained unchecked for years, the migration process from the legacy system to the new database could be a potential disaster. Data migration introduces serious errors that, coupled with any previous, unresolved error, could render the data useless. In cases where the legacy system’s metadata is out-of-sync with the actual schema, it can lead to serious problems in data quality.

Consolidating ERPs or Systems: Often when business mergers or acquisitions happen, data consolidation is part of the process. If the acquired business had outdated data and it was not corrected before the consolidation process, the business itself could suffer setbacks. Merging databases with differences in data and incompatible fields may also impact data accuracy.

Manual Data Entry: Humans are still significantly involved in the data entry process. With human involvement comes the chances of human error. Since legacy systems usually have humans entering data manually, there are high chances of incomplete, inaccurate, or duplicated data. End-users may be filling in shortcut information while data operators may make assumptions of this information and proceed to enter incorrect values.

Batch Processing: There may be situations where organizations automate bulk processing of large volumes of data. For example, importing data between third-party CRMs is commonly done in batches to save time and effort. During this time, the system may push large amounts of wrong or duplicated data as well. This leads to severe issues of duplicated data that may result in incorrect analysis and miscalculated decisions.

Data Manipulations: System upgrades, database redesigns, and other such activities may result in data issues or, worse, data deterioration if prior planning or backup was not implemented. These processes may also leave junk data in databases, which may eventually cause data quality issues.

There are many other instances where processes and operational changes within an organization can have a direct or indirect impact on data quality. With organizations focusing on the automation of internal processes, high volumes of data are at risk of being either neglected or mishandled by team members who may not necessarily be data experts.

In conclusion, data quality may be lost due to processes that bring data into the system, or by processes that seek to enhance existing systems in the organization.

Successful digital transformations, therefore, rely on replacing existing legacy systems, provided it is done with a data management plan in place. As obvious as it is, only a few organizations have a data strategy in place when embarking on an ambitious digital transformation initiative.

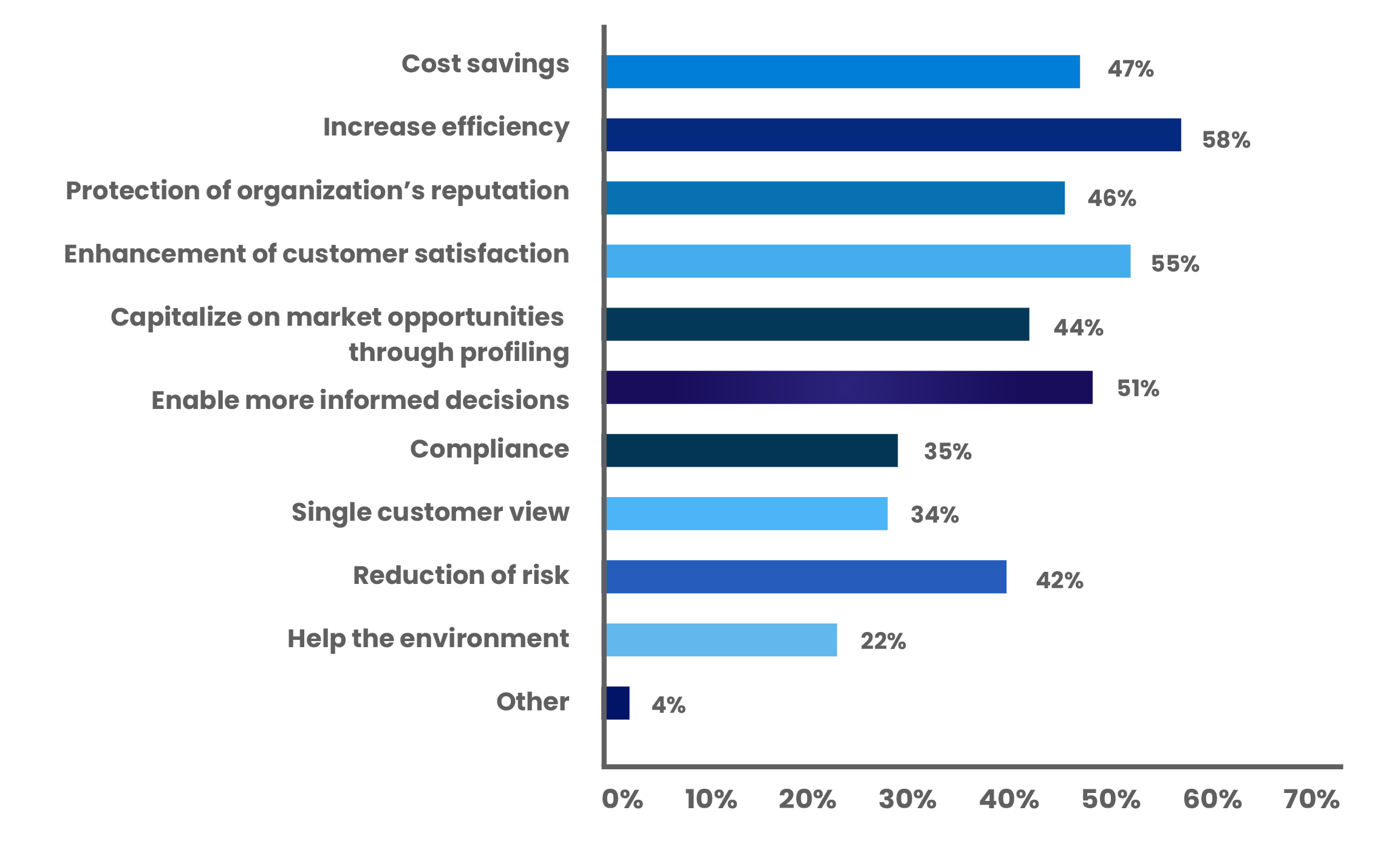

Needless to say, data transformation is the first step to a successful digital transformation, but for data transformation to happen, it’s necessary for the organization to set clear goals and vision for the value it expects both transformations to create.

Organizations need to identify the obstacles that are holding them back – from the lack of front-office controls that are the root cause of poor data input to inefficient management of data within multiple legacy IT systems, to a lack of business support and technical talent and finally the unclear goals and ambitions that stall a data & digital transformation initiative.

Solution – What Can Be Done to Improve Data Quality & Update Legacy Systems While Keeping in Mind Existing Challenges?

Digital transformation is not something you can achieve in a month or a year. It takes months and years of planning ahead, assessing risks and challenges, and trying to find a middle-ground. Go too fast and you disrupt a functional business – go too slow and you lose out on growth, opportunities & business value.

So let’s boil it down to the basics.

Data Strategy as the Key to Updating Legacy Systems

Consider digital transformation as the ultimate destination. To get there, legacy systems have to be updated. To update legacy systems, one has to start from data.

Therefore, creating a data transformation strategy is the key to updating legacy systems and ensuring a successful digital transformation.

Here’s what can be done at the most basic level:

- Extract Data from the System: This might sound rudimentary, but even at an enterprise level, data must be safely extracted from the system and backed up before any other processes occur. Migrating data out of a legacy system starts with ensuring it can be extracted safely.

- Mapping the Data: When migrating to a new system, old data needs to be mapped onto the new system. The mapping process is to analyze whether the data in the old system is compatible or can be mapped to the new system. This step is important for the new system to understand data from the legacy system.

- Cleanse & Optimize Data: Data from a legacy system are bound to have quality issues. Before mapping or moving to a new system, old data must be cleansed and rid of duplications, incomplete data, and unstructured data. For example, a legacy system with dashes in phone numbers will not work with a new system that doesn’t allow or use dashes.

- Test & Validate Data: Once data is cleansed, a sample set of data is imported into the new system to test for potential problems and errors. This weeds out potential issues before the new system goes live. The validation and standardization procedures ensure that old data is standardized according to the new system’s features.

- Train Staff & Set Data Entry Standards: It is here that staff training and front-end data input procedures must be conducted. Often organizations end up committing the same mistakes with the new system by overlooking the human factor in data input. For example, addresses must be written in their full form, names must not be abbreviated, and so on. Setting standards across the organization ensures everyone is on board with best practices. While this doesn’t completely prevent errors, it does give employees much-needed awareness.

Once data has been cleansed, standardized, and stored in a centralized system, it becomes easier to manage and analyze. A robust backup and recovery plan, a scalability plan in terms of performance and capacity, and a storage location plan must all be part of this data strategy.
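The mapping, cleansing, and validation steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the legacy column names (`CUST_NM`, `PHONE_NO`, `ZIP`), the target field names, and the rule that the new system expects 10-digit phone numbers without dashes are all hypothetical, and a real migration would involve far more fields and rules.

```python
import re

# Hypothetical mapping from legacy column names to the new system's field names.
FIELD_MAP = {"CUST_NM": "name", "PHONE_NO": "phone", "ZIP": "zip_code"}

def normalize_phone(raw):
    """Keep digits only, so '555-010-1234' becomes '5550101234'."""
    return re.sub(r"\D", "", raw or "")

def map_record(legacy):
    """Rename legacy fields to the new schema and cleanse the values."""
    new = {FIELD_MAP[k]: (v or "").strip() for k, v in legacy.items() if k in FIELD_MAP}
    new["phone"] = normalize_phone(new.get("phone", ""))
    return new

def validate(record):
    """Return a list of problems that would block import into the new system."""
    problems = []
    if not record.get("name"):
        problems.append("missing name")
    if len(record.get("phone", "")) != 10:  # assumption: new system expects 10 digits
        problems.append("phone is not 10 digits")
    if not record.get("zip_code"):
        problems.append("missing zip code")
    return problems

# A small sample set, tested before any full import takes place.
sample = [
    {"CUST_NM": " Jane Doe ", "PHONE_NO": "555-010-1234", "ZIP": "06103"},
    {"CUST_NM": "J. Smith", "PHONE_NO": "010-1234", "ZIP": ""},
]
for legacy in sample:
    record = map_record(legacy)
    print(record, validate(record))
```

Running the sample through `validate` before the full import mirrors the test step: records that come back with an empty problem list are safe to load, while the rest go back for cleansing.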

“The Data Journey: It’s only the beginning for digital transformation.”

– Doug Cutting | Chief Architect

Creating a Single Customer View to Optimize Business Processes

The whole purpose of digital transformation is to meet customer expectations and enhance customer experience.

“56% of CEOs say digital improvements have led to increased revenue.”

– Gartner Survey

But digital improvements are not a shot in the dark. Companies have to get accurate insight from their customer data to know what to improve. This insight can only be obtained if the data is reliable and can provide a unified, single-customer view.

A single customer view is a holistic, consolidated, consistent representation of an organization’s customer data. Companies may not need to make drastic overhauls to their data or digital platforms to achieve a single customer view. Instead, they can make small steps to clean, dedupe, match, and consolidate customer data held in more than one system – resolving customer entity discrepancies both within and between systems.

“More than 400 customer experience executives were surveyed, and achieving a single customer view came out as one of the top challenges.”

– Harvard Business Review Study

Sales, marketing, billing, shipping, and customer service are some of the core departments that share the same customer info. If these departments are each using their own CRM or data input methods, the same data is being recorded, used, and distributed in multiple ways.

This means a company could potentially have dozens of records across data sources for each customer. Some records may contain their buying preferences, some may have demographic data, others may have more complete contact data, etc. And in each of these records, the key identifier is a little different. Perhaps the customer used a nickname when filling out a loyalty rewards program card, or they could’ve used their email address when signing up on the website. Regardless of the reason, there is now a variety of representations for each customer stored across a variety of systems.

Take, for example, the case of a retail food distributor that does not have a unified source of data. Their data problems:

- The [phone] token has missing city codes in the number.

- The [address] field has missing zip codes.

- The [name] token has typos, abbreviations, nicknames, fake names, missing first/last names, and so on.

- Spellings are inaccurate and contact info has been updated multiple times by different people across different departments.

When executives want to analyze their data to make a key business expansion decision, they can’t seem to get reliable statistics from their data.

Billing data gives a different story than customer service data. Feedback and reviews from customers are random and inconsistent.

External stakeholders or third-party distributors send in their data which doesn’t map with the retailer’s existing data pattern. Because retailers send local information, they don’t record phone numbers with city codes.

When marketing obtains this information, they are dealing with broken email records, missing phone numbers, typos in spelling, and much more.

Data duplication and data inconsistency spell disaster for this company.

How Do You Obtain a Single Customer View?

Now that we have a better idea of what a Single Customer View is and how it’s a primary challenge that can be resolved, let’s take a look at the capabilities necessary for creating a single view: Integration, Profiling, Cleansing, and Matching.

Data Integration:

The first step is to identify and integrate the first-party, second-party, and third-party data sources that contain the customer information to bring together. In which format is the company receiving data from partners, vendors, or suppliers? Which applications or databases are in place internally? What public data sources are connected or used?

Customer data sources needed to build a true Single Customer View can include:

- Social media

- Transactions

- Sales team interactions

- Firmographics

- Customer preferences

- Web and mobile browsing activities

- Demographics

- Sentiments

- Etc.

Make a list of data sources and create a strategy for the integration of these sources. Now cross-check this list against your legacy system to identify which of them can be integrated perfectly into the system and which would require external options.

Data Profiling: When integrating data from multiple sources at scale, data quality issues such as typos, irrelevancy, incompleteness, inaccuracy, and lack of standardization need to be sorted out. Profiling data after integration gives an overview of these issues, such as punctuation in names or missing zip codes in addresses. Fixing them gives better results when matching different representations of the same customer later in the process.
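As a rough illustration of what a profiling pass reports, the sketch below counts empty fields and names containing unexpected punctuation. The field names and the choice of which characters count as "unexpected" are assumptions made for this example; dedicated profiling tools check many more patterns.

```python
import re
from collections import Counter

def profile(records, fields):
    """Count common quality issues per field across a list of dict records."""
    report = Counter()
    for rec in records:
        for field in fields:
            value = (rec.get(field) or "").strip()
            if not value:
                report[f"{field}: missing"] += 1
        # Assumption: letters, digits, spaces, apostrophes, and hyphens are fine in names.
        name = rec.get("name") or ""
        if re.search(r"[^\w\s'-]", name):
            report["name: unexpected punctuation"] += 1
    return report

customers = [
    {"name": "Jane Doe", "zip_code": "06103", "phone": "5550101234"},
    {"name": "J. Smith", "zip_code": "", "phone": "0101234"},
    {"name": "", "zip_code": None, "phone": "5550109999"},
]
for issue, count in sorted(profile(customers, ["name", "zip_code", "phone"]).items()):
    print(f"{issue}: {count}")
```

The report does not fix anything by itself. Its purpose is to show where cleansing effort should go first.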

Data Cleansing: Once quality issues are identified, it’s time to scrub the data clean and standardize it to get the best results. The cleansing step can be very time-consuming and requires considerable attention to detail. For faster, accurate results, investing in data cleansing software is a good choice.

Data Matching: This is the most important part of the process, where data is matched to identify and remove duplicates, compare data, identify patterns, and enable greater standards. For best data-matching results, data must be standardized to minimize false negatives. Ideally, a good data quality solution will also allow for visual data matching where the user can define their match conditions.
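To make the idea of user-defined match conditions concrete, here is a minimal sketch using Python's standard-library `difflib.SequenceMatcher`. It is not how any particular data quality product works. The match condition (similar name plus identical email), the 0.85 threshold, and the sample records are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations

def standardize(text):
    """Lowercase and collapse whitespace so formatting differences don't block a match."""
    return " ".join((text or "").lower().split())

def similarity(a, b):
    """Similarity ratio between 0.0 and 1.0 from the standard library's SequenceMatcher."""
    return SequenceMatcher(None, standardize(a), standardize(b)).ratio()

def find_matches(records, threshold=0.85):
    """Return index pairs whose names are similar and whose emails agree after standardizing."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(records), 2):
        same_email = standardize(a["email"]) == standardize(b["email"])
        if same_email and similarity(a["name"], b["name"]) >= threshold:
            pairs.append((i, j))
    return pairs

records = [
    {"name": "Jonathan Smith", "email": "jsmith@example.com"},
    {"name": "Jonathon  Smith", "email": "JSmith@example.com"},
    {"name": "Maria Garcia", "email": "mgarcia@example.com"},
]
print(find_matches(records))
```

Standardizing before comparing is what keeps false negatives down: without it, the difference in letter case between the two email addresses would hide an otherwise obvious duplicate. Comparing every pair is fine for a small sample but grows quadratically, so large datasets typically need a way to narrow the candidate pairs first.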

Merge & Survivorship: Once the data is cleansed, deduped, and standardized, it’s time for the final step in the process: choosing a master record. After all, the purpose is to build a Single Customer View that contains the cleanest, most complete record for every customer. Now that you’ve matched all of your data sources that contain customer data, it’s time to bring all of that information together. DataMatch Enterprise, Data Ladder’s data quality software, allows for a Merge & Survivorship step where the user can merge data into a single, comprehensive record.
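A simple way to picture survivorship is the sketch below, which assumes one possible rule: the most complete record becomes the master, and its empty fields are filled from the remaining duplicates. The rule, field names, and sample data are illustrative assumptions, not a description of DataMatch Enterprise's actual logic. Real survivorship rules may also weigh recency or source reliability.

```python
def completeness(record):
    """Number of non-empty fields; used here as the survivorship rule."""
    return sum(1 for value in record.values() if value)

def merge_records(duplicates):
    """Pick the most complete record as master, then fill its gaps from the others."""
    ranked = sorted(duplicates, key=completeness, reverse=True)
    master = dict(ranked[0])  # copy so the source records stay untouched
    for other in ranked[1:]:
        for field, value in other.items():
            if not master.get(field) and value:
                master[field] = value
    return master

# Three records already identified as the same customer.
duplicates = [
    {"name": "J. Smith", "email": "jsmith@example.com", "phone": "", "zip_code": ""},
    {"name": "Jonathan Smith", "email": "jsmith@example.com", "phone": "5550101234", "zip_code": ""},
    {"name": "Jon Smith", "email": "", "phone": "", "zip_code": "06103"},
]
print(merge_records(duplicates))
```

Here the second record survives as the master because it has the most populated fields, and the zip code is carried over from the third record, giving one record that is more complete than any of its sources.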

Obtaining a single customer view will not only help the business identify opportunities but will also assist them in upgrading from legacy systems without disrupting the customer experience or the core operations of the business.

It must be noted once again that digital transformation is not about uprooting existing systems – it’s about how well you optimize business processes to meet customer demands while keeping in mind budget and operational restraints. With the experience we’ve had with hundreds of clients over the years, we know for certain that the most successful transformations always start with data. New systems and processes are a later investment.

Case Study: Maxeda DIY Group

Company Profile

Maxeda DIY Group is a renowned Dutch retail group with 376 stores and nearly 7,000 employees across Belgium and the Netherlands. This company is a classic example of a large enterprise operating on multiple enterprise apps, trying to make sense of data streaming in from dozens of locations and sources. While the company’s brick-and-mortar stores are doing exceptionally well, they plan to increase digital sales through their website. For this purpose, they needed clean data that they could use in a CRM and a new PIM.

Business Situation

Maxeda’s primary purpose for going digital was to keep customers loyal. They also wanted to acquire new customers and increase their customer base by focusing on personalized and relevant marketing. To do this, they needed data that could give them insights into user behavior both online and offline as well as information that could be used to create a more personalized communication channel with their customers. With digital transformation, Maxeda wanted to achieve customer loyalty and increase business value.

Data Challenge

Like many large enterprises, Maxeda had lots of legacy system issues that were affecting their digital transformation plan. Their data was split not just between different systems, but also between different countries. Additional challenges included non-unified methods of collecting and storing data. All this disparity eventually led to numerous data challenges such as the lack of unified systems, unorganized data, and old and dirty data that needed cleaning and standardizing. These challenges were significant roadblocks to Maxeda’s digital plans. Before they could move into a new CRM or PIM or implement a marketing plan, they had to ensure optimal data quality.

DataMatch Enterprise Was Used to Provide a Single Customer View

Maxeda used DataMatch Enterprise to clean, profile, identify, and remove duplicates of customer profiles, addresses, DOBs, and emails. There were 5 data sources of 3 different brands spread across 2 countries, which were brought together to generate a single customer view.

Once this view was created, it would be stored in the new CRM and Maxeda could achieve their marketing and personalized communication goals.

Benefits

It took just 2 months and 3 people to deliver Maxeda the results they needed. The manual method would have taken at least 10 IT experts over the course of 6 months to sort out data.

For the first time in its history, Maxeda had a single customer view stored in a single source in a single location, and it took just 2 months to obtain.

The company had more accurate data and was able to accomplish complicated data goals in a short time span.

Conclusion

Aging systems and infrastructure are bogging down processes and hurting company revenues. But it’s not just about a slow system – it’s the data in that system that has not been brought up to par with modern digital needs. So while organizations aim for augmented or virtual reality, blockchain, cloud computation, data lakes, and many other digital initiatives, they will find it hard to succeed unless they find a way to unleash their data.

Essentially then, the true essence of transformation is not systems, but data, which is the soul of a system.

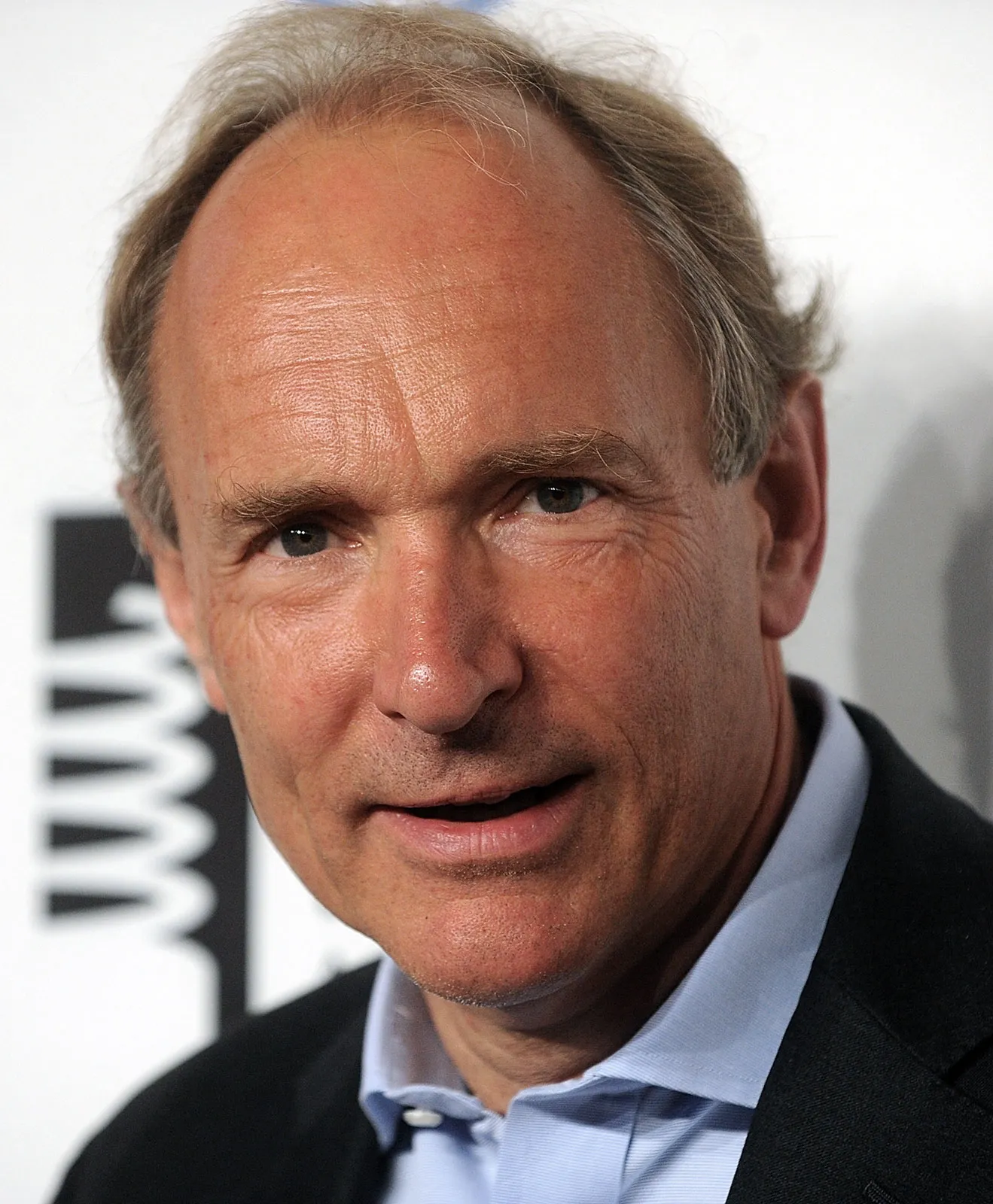

“Data is a precious thing and will last longer than the systems themselves.”

– Tim Berners-Lee | Inventor of the World Wide Web

Image taken from: https://www.britannica.com/biography/Tim-Berners-Lee