Data standardization software

Trusted By

Definition

What is data standardization?

Data standardization is the process of transforming data from multiple disparate sources into a single, consistent format. It combines data cleaning and standardization activities to yield a consistent and usable view across those sources.

Enterprises use 50+ applications on average, each with its own rules and formats for data entry and storage. On top of that, human error introduces inconsistent punctuation and capitalization, invalid data entries, and obscure or conflicting variations of acronyms. Organizations need to identify and resolve such inconsistencies with data standardization techniques to ensure reliable data quality.

Process

How does data standardization work?

Combine and profile data

Bring data together in one place and build a quick data summary report that highlights missing, incomplete, or invalid values and identifies potential data cleansing opportunities.
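To make the profiling step concrete, here is a minimal sketch in plain Python. It is not how DataMatch Enterprise builds its reports; the `profile` function, the sample sources, and the column names are all hypothetical, and "missing" is assumed to mean `None` or a blank string.

```python
def profile(records, columns):
    """Return per-column counts of total, missing, and distinct values."""
    summary = {}
    for col in columns:
        values = [rec.get(col) for rec in records]
        # Assumption: None and whitespace-only strings count as missing.
        filled = [v for v in values if v is not None and str(v).strip() != ""]
        summary[col] = {
            "total": len(values),
            "missing": len(values) - len(filled),
            "distinct": len(set(filled)),
        }
    return summary


# Two hypothetical sources combined into one list of records.
crm = [{"name": "Ann Lee", "phone": "555-0100"}, {"name": "Bob Roy", "phone": ""}]
billing = [{"name": "ann lee", "phone": None}]
combined = crm + billing

report = profile(combined, ["name", "phone"])
for column, stats in report.items():
    print(column, stats)
```

Note that "Ann Lee" and "ann lee" are counted as two distinct values here, which is exactly the kind of inconsistency the later steps are meant to resolve.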

Parse and merge columns

Run data fields against a dictionary of words to identify sub-data elements (such as Street Name and Street Number for Address), and merge columns to follow custom-created formats.
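As a rough illustration of dictionary-based parsing, the sketch below splits an address into a street number and street name, then merges them back into one column. The `STREET_TYPES` dictionary, both function names, and the output format are assumptions made for this example, not part of any product.

```python
import re

# Hypothetical mini-dictionary mapping street-type words to a standard form.
STREET_TYPES = {
    "st": "Street", "street": "Street",
    "ave": "Avenue", "avenue": "Avenue",
    "rd": "Road", "road": "Road",
}


def parse_address(raw):
    """Split a value such as '12 main st.' into street number and street name."""
    tokens = re.findall(r"[A-Za-z0-9]+", raw)
    number = tokens[0] if tokens and tokens[0].isdigit() else ""
    rest = tokens[1:] if number else tokens
    words = [t.capitalize() for t in rest]
    if words:
        # Only the last word is looked up, so 'St' in 'St Mary Rd' is left alone.
        words[-1] = STREET_TYPES.get(rest[-1].lower(), words[-1])
    return {"street_number": number, "street_name": " ".join(words)}


def merge_address(parts):
    """Merge the parsed parts into one column using a custom format."""
    return f"{parts['street_number']} {parts['street_name']}".strip()


print(merge_address(parse_address("12 main st.")))
```

A real dictionary would be far larger and would also cover unit numbers, directions, and postal codes.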

Pattern recognition and validation

Recognize hidden patterns in your data columns, run validation checks, and transform invalid information so that all values follow the standardized and acceptable pattern.
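The validate-then-transform idea can be sketched with a regular expression. The target pattern `NNN-NNN-NNNN` and the rule for dropping a leading country code of 1 are assumptions chosen for the example; your own standard may differ.

```python
import re

# Assumed standard pattern for this sketch: NNN-NNN-NNNN.
TARGET = re.compile(r"^\d{3}-\d{3}-\d{4}$")


def standardize_phone(raw):
    """Return the phone number as NNN-NNN-NNNN, or None if it cannot be fixed."""
    digits = re.sub(r"\D", "", raw)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading country code of 1
    if len(digits) != 10:
        return None  # flag for manual review instead of guessing
    candidate = f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"
    return candidate if TARGET.match(candidate) else None


for value in ["(555) 010-0199", "+1 555.010.0199", "12345"]:
    print(repr(value), "->", standardize_phone(value))
```

Returning `None` for values that cannot be repaired keeps invalid entries visible rather than silently rewriting them.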

Remove and replace characters

Remove and replace leading and trailing spaces, specific letters or numbers, non-printable characters, and more.
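A minimal sketch of this cleanup step, using only the Python standard library. The function name `clean_text` is hypothetical, and collapsing internal whitespace runs to a single space is an added assumption beyond trimming the ends.

```python
import re


def clean_text(value):
    """Drop non-printable characters, collapse whitespace runs, trim both ends."""
    # Keep whitespace such as tabs for now so they can be collapsed to a space.
    kept = "".join(ch for ch in value if ch.isprintable() or ch.isspace())
    return re.sub(r"\s+", " ", kept).strip()


print(repr(clean_text("  Acme\x00 Corp\t\n")))
```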

Transform letter cases

Transform cases of letters in strings to ensure a consistent and standardized view across all data records.
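Case transformation could look something like the sketch below. The `KEEP_UPPER` acronym list is hypothetical, and the per-word capitalization is deliberately simple: names such as "O'Brien" or "McDonald" would need extra rules.

```python
# Hypothetical list of acronyms that should stay fully upper-case.
KEEP_UPPER = {"LLC", "USA", "IBM"}


def standardize_case(value):
    """Capitalize each word, except known acronyms, which are upper-cased."""
    words = value.split()
    return " ".join(
        w.upper() if w.upper() in KEEP_UPPER else w.capitalize() for w in words
    )


print(standardize_case("aCME widgets llc"))
```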

Use the Wordsmith tool

Fetch the most frequently occurring words in a data field, then flag, replace, or delete specific words to achieve standardization or to prepare data for matching and deduplication.
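The general idea of counting frequent words and then applying replace or delete rules can be sketched as follows. This is not the Wordsmith implementation; the function names, the sample company names, and the rule sets are all hypothetical.

```python
from collections import Counter


def word_frequencies(values):
    """Count how often each word occurs across a column, ignoring case and . or ,"""
    counts = Counter()
    for value in values:
        counts.update(value.lower().replace(".", "").replace(",", "").split())
    return counts


def apply_rules(value, replace=None, delete=()):
    """Replace or delete words according to rules keyed by lower-case word."""
    replace = replace or {}
    out = []
    for word in value.split():
        key = word.lower().strip(".,")
        if key in delete:
            continue  # drop noise words entirely
        out.append(replace.get(key, word))
    return " ".join(out)


companies = ["Acme Inc.", "Acme Incorporated", "Globex Inc", "The Globex Corp."]
freq = word_frequencies(companies)
print(freq.most_common(3))

rules = {"inc": "Incorporated", "corp": "Corporation"}
cleaned = [apply_rules(c, replace=rules, delete={"the"}) for c in companies]
print(cleaned)
```

Reviewing the frequency list first is what lets you decide which words deserve a rule at all.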

Solution

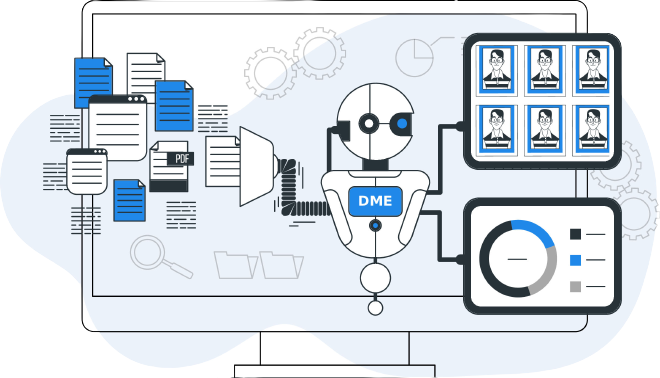

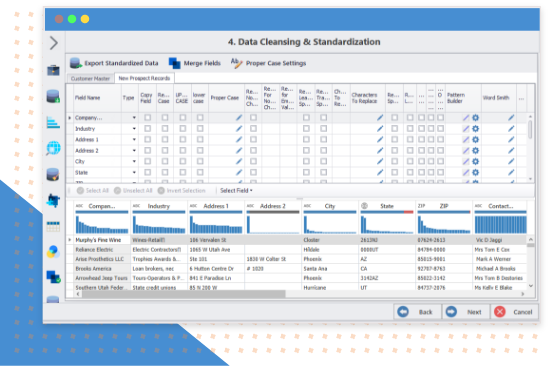

Let Data Ladder handle your data standardization

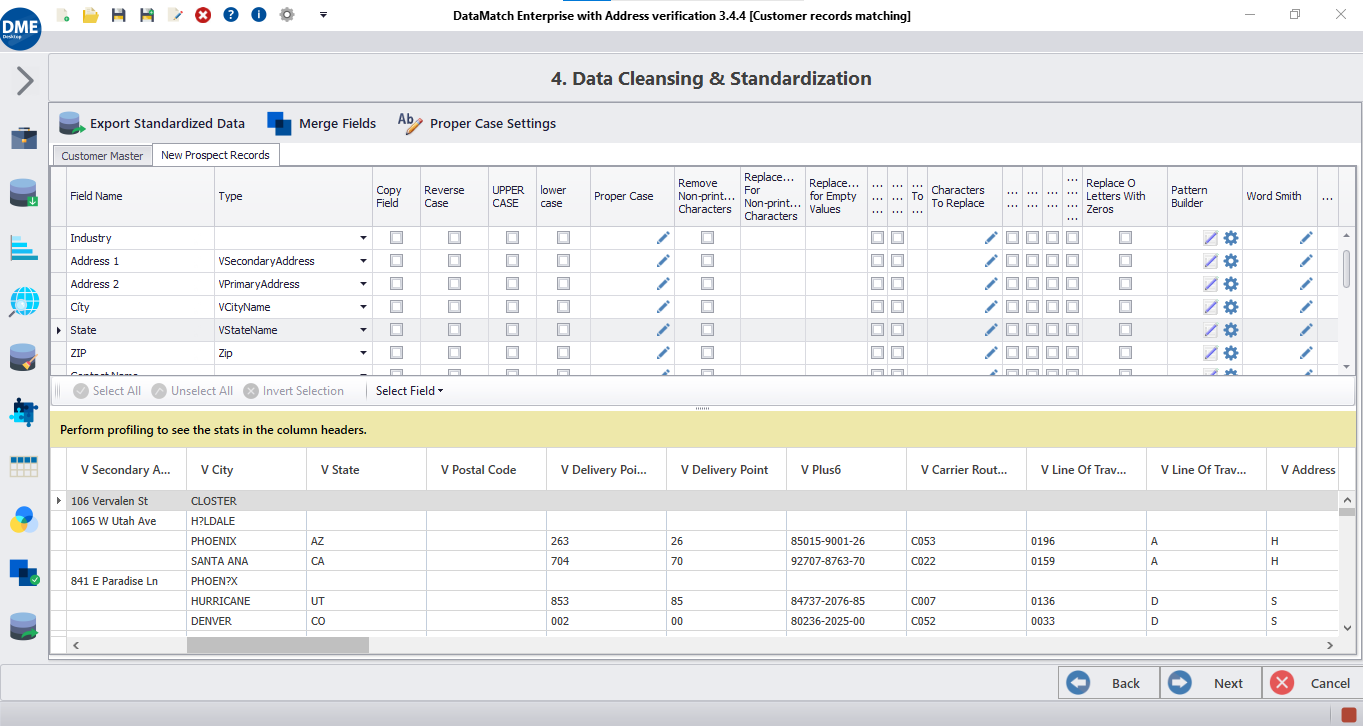

See DataMatch Enterprise at work

DataMatch Enterprise (DME) is a highly visual data standardization tool with a suite of features for inspecting, reconciling, and removing data errors at scale in an intuitive and affordable manner.

DME offers a range of features that make your data standardization process easier, quicker, and smarter. Its Pattern Builder comes with a built-in pattern library, as well as a visual drag-and-drop regex designer for creating custom patterns. DME also centralizes all your data cleaning activities, so that the same activities can be applied to old, new, and upcoming records without any additional work.

Business benefits

How can data standardization benefit you?

Data masking for compliance

Transform data values using patterns, and mask or hide any sensitive or personally identifiable information to ensure data compliance.
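Pattern-based masking can be sketched in a few lines. The masking policies below (keep the first character of an email's local part, keep the last four digits of a `NNN-NN-NNNN` number) are assumptions for illustration only and are not compliance guidance.

```python
import re


def mask_email(email):
    """Keep the first character and the domain; mask the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return email  # not email-shaped, leave unchanged
    return local[0] + "*" * (len(local) - 1) + "@" + domain


def mask_ssn(text):
    """Mask all but the last four digits of NNN-NN-NNNN patterns in free text."""
    return re.sub(r"\b\d{3}-\d{2}-(\d{4})\b", r"***-**-\1", text)


print(mask_email("jane.doe@example.com"))
print(mask_ssn("SSN 123-45-6789 on file"))
```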

Identify and remove duplicates

Uniform formats and patterns improve the accuracy of matching algorithms in finding exact, fuzzy, and phonetic matches, as well as duplicate records.
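To see why standardization helps matching, the sketch below compares two company names before and after a simple normalization step. It uses `difflib.SequenceMatcher` from the Python standard library as a stand-in fuzzy matcher; the `normalize` rules are assumptions, and production matching engines use more specialized algorithms.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lower-case, drop periods and commas, and collapse whitespace."""
    return " ".join(name.lower().replace(".", "").replace(",", "").split())


def similarity(a, b):
    """Similarity ratio between 0 and 1, computed on normalized values."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


a, b = "ACME Corp.", "acme  corp"
raw_score = SequenceMatcher(None, a, b).ratio()
std_score = similarity(a, b)
print(f"raw: {raw_score:.2f}  standardized: {std_score:.2f}")
```

The two values refer to the same company, but only the standardized comparison scores them as identical.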

Greater marketing ROI

Parse names and addresses or standardize company, email, and phone record formats in CRM to optimize email and direct mail campaigns.

Improve workforce productivity

Automate data standardization across millions of records to save sales, data, and IT teams hundreds of hours in validation and oversight.

Better decision-making

Implementing company-wide data rules gives managers error-free and duplicate-free data, which allows them to make more informed decisions.

Minimize costs

Consistent, error-free data helps avoid potential revenue losses from CRM data decay, invoice overpayments, and non-compliance penalties.

Let’s compare

How accurate is our solution?

In-house implementations have a 10% chance of losing in-house personnel, so over 5 years, half of the in-house implementations lose the core member who ran and understood the matching program.

Detailed tests were completed on 15 different product comparisons with university, government, and private companies (80K to 8M records), with the following results. Note: the results include the effect of false positives.

| Features of the solution | Data Ladder | IBM Quality Stage | SAS Dataflux | In-House Solutions | Comments |
|---|---|---|---|---|---|
| Match Accuracy (40K to 8M record samples) | 96% | 91% | 84% | 65-85% | Multi-threaded, in-memory, no-SQL processing to optimize for speed and accuracy. Speed is important, because the more match iterations you can run, the more accurate your results will be. |
| Software Speed | Very Fast | Fast | Fast | Slow | A metric for ease of use. Here speed indicates time to first result, not necessarily full cleansing. |
| Time to First Result | 15 Minutes | 2 Months+ | 2 Months+ | 3 Months+ | |
| Purchasing/Licensing Cost | 80 to 95% Below Competition | $370K+ | $220K+ | $250K+ | Includes base license costs. |

Frequently asked questions

Got more questions? Check this out

Ready? Let's go

Try now or get a demo with an expert!