Data scrubbing software

Trusted By

Definition

What is data scrubbing?

Data scrubbing, also called data cleansing, is the process of identifying inconsistent, inaccurate, incomplete, and otherwise messy data and correcting it so that data is clean and standardized across the enterprise. This matters most for downstream analytics applications that support business processes and decision-making.

Data scrubbing software achieves this by first profiling data, applying standardization techniques, and then matching entities across organization-wide systems or within a dataset for enrichment and deduplication purposes.

Process

How does data scrubbing work?

Data integration

Connect to and load data from various sources, such as local files, relational database servers, CRMs, or other web applications.

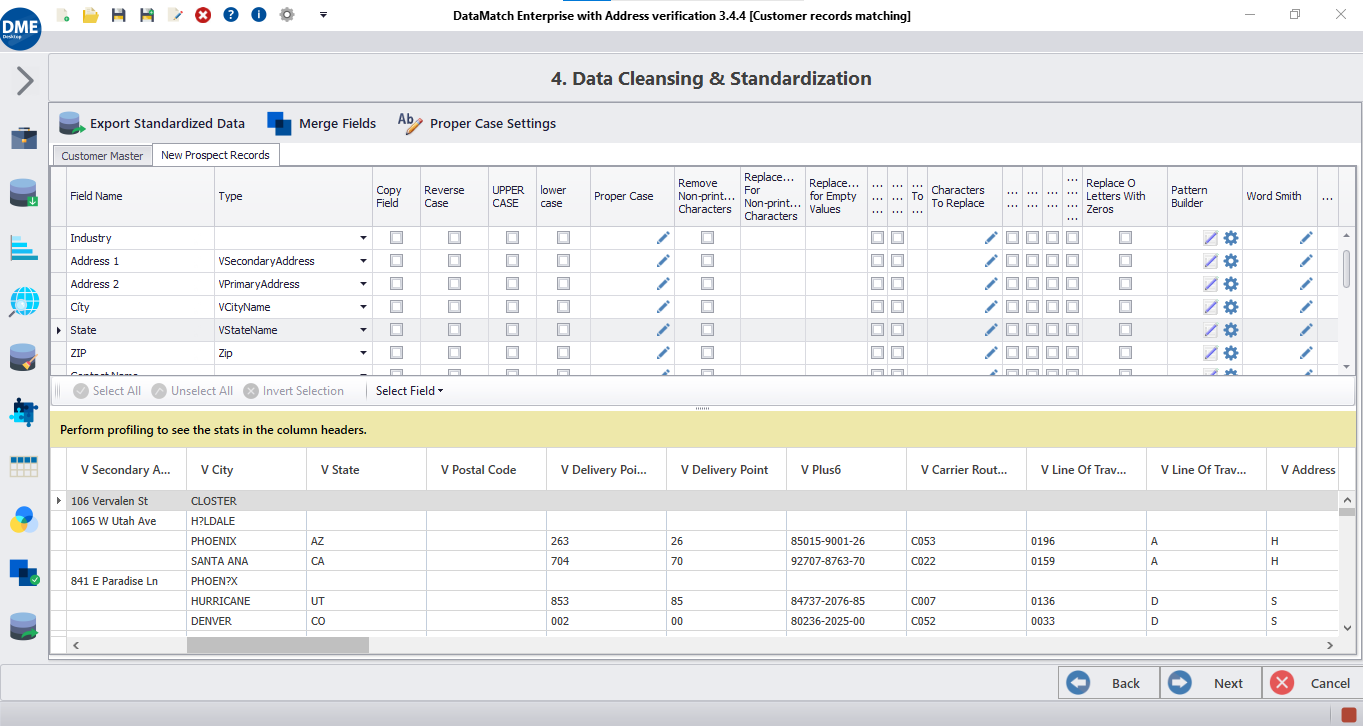
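This step can be illustrated with a rough sketch. It is not DataMatch Enterprise's API: it uses only Python's standard library to read a CSV export and a SQLite table into one list of records, tagging each row with its origin so later matching can trace it back. The function names `load_csv`, `load_sqlite`, and `tag_source`, the `crm` table, and the sample values are all hypothetical.

```python
import csv
import io
import sqlite3

def load_csv(text):
    """Read CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))

def load_sqlite(conn, table):
    """Read every row of a table into a list of dicts.

    The table name is interpolated directly because SQL identifiers cannot be
    bound as parameters, so it must come from a trusted source.
    """
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    rows = conn.execute(f"SELECT * FROM {table}").fetchall()
    return [dict(row) for row in rows]

def tag_source(records, source):
    """Add a '_source' field so each record remembers where it came from."""
    return [{**record, "_source": source} for record in records]

# Usage: combine a CSV export and a (hypothetical) CRM table into one working set.
csv_text = "name,email\nJane Doe,jane@example.com\n"
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE crm (name TEXT, email TEXT)")
conn.execute("INSERT INTO crm VALUES ('JANE DOE', 'Jane@Example.com')")
combined = tag_source(load_csv(csv_text), "csv") + tag_source(load_sqlite(conn, "crm"), "crm")
```

Keeping every source in the same record shape (a dict per row) is what lets the later cleansing and matching steps treat all sources uniformly.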

Data cleansing

Perform data cleansing activities to remove statistical and structural anomalies from data values: remove leading and trailing spaces, replace null values, fix punctuation errors, and more.
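The cleansing operations listed above can be sketched as a small value-level function. This is a minimal illustration, not the product's implementation. The set of null-like tokens in `NULL_TOKENS` and the punctuation rules are assumptions chosen for the example, and `clean_value` and `clean_record` are hypothetical names.

```python
import re

# Assumption: these placeholder strings are treated as missing values.
NULL_TOKENS = {"", "null", "n/a", "na", "none", "-"}

def clean_value(value, default=""):
    """Trim, collapse whitespace, replace null-like values, and tidy punctuation."""
    if value is None:
        return default
    text = str(value).strip()                     # remove leading/trailing spaces
    text = re.sub(r"\s+", " ", text)              # collapse internal whitespace runs
    if text.lower() in NULL_TOKENS:
        return default                            # replace null-like placeholders
    text = re.sub(r"\s+([,.;:])", r"\1", text)    # drop spaces before punctuation
    text = re.sub(r"([,.;:])\1+", r"\1", text)    # collapse repeated punctuation (also turns "..." into ".")
    return text

def clean_record(record, defaults=None):
    """Clean every field; 'defaults' maps field name -> replacement for missing values."""
    defaults = defaults or {}
    return {key: clean_value(val, defaults.get(key, "")) for key, val in record.items()}
```

For example, `clean_value("  Acme  Corp ,, Inc.. ")` yields `"Acme Corp, Inc."`, and `clean_value("N/A", default="unknown")` yields `"unknown"`.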

Use the Wordsmith tool

Find the most frequently occurring words in a data field, then decide whether to flag, replace, or delete specific words to standardize values or prepare data for matching and deduplication.
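The idea behind this kind of word analysis can be sketched in a few lines. This is a hypothetical illustration, not how the Wordsmith tool is implemented. It counts word frequency across a field with `collections.Counter`, then applies user-chosen replace, delete, and flag rules. The names `top_words` and `apply_word_rules` and the tokenizing regex are assumptions.

```python
import re
from collections import Counter

def top_words(values, n=5):
    """Return the n most common lowercase words across all values of one field."""
    counts = Counter()
    for value in values:
        counts.update(re.findall(r"[a-z0-9&']+", value.lower()))
    return counts.most_common(n)

def apply_word_rules(value, replace=None, delete=None, flag=None):
    """Apply per-word rules to one value; return (new_value, flagged)."""
    replace = replace or {}
    delete = set(delete or ())
    flag = set(flag or ())
    kept, flagged = [], False
    for word in value.split():
        key = word.lower().strip(".,")       # compare words without case or trailing punctuation
        if key in delete:
            continue                         # drop noise words such as "the"
        if key in flag:
            flagged = True                   # mark the record for review, keep the word
        kept.append(replace.get(key, word))  # standardize variants, e.g. "Incorporated" -> "Inc"
    return " ".join(kept), flagged
```

With company names such as `["Acme Inc", "Beta Inc.", "Gamma Incorporated", "Delta Inc"]`, `top_words(values, 1)` surfaces `("inc", 3)`. That suggests a rule like `replace={"incorporated": "Inc"}` to standardize the suffix before matching.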

Data profiling

Run profiling and validity checks to assess data quality, generate reports on the current state of your data, and identify opportunities for data cleansing.
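A basic column profile can be sketched as follows. It is a simplified, hypothetical example rather than the product's profiling report. For each column it computes how many values are filled, how many are distinct, the range of value lengths, and the most common value. The metric names and the functions `profile_column` and `profile_table` are assumptions.

```python
from collections import Counter

def profile_column(values):
    """Summarize one column: completeness, uniqueness, length range, top value."""
    non_blank = [v for v in values if v not in (None, "")]
    lengths = [len(str(v)) for v in non_blank]
    return {
        "count": len(values),
        "filled": len(non_blank),
        "fill_rate": len(non_blank) / len(values) if values else 0.0,
        "distinct": len(set(non_blank)),
        "min_len": min(lengths, default=0),
        "max_len": max(lengths, default=0),
        "top_value": Counter(non_blank).most_common(1)[0][0] if non_blank else None,
    }

def profile_table(records):
    """Profile every column that appears in any record (missing keys count as blank)."""
    columns = {key for record in records for key in record}
    return {col: profile_column([r.get(col) for r in records]) for col in sorted(columns)}
```

On rows such as `{"state": "NY", "zip": "10001"}`, `{"state": "ny", "zip": ""}`, and `{"state": "NY", "zip": "1000"}`, the profile shows two distinct spellings of the same state, a zip fill rate of about 67%, and zip lengths from 4 to 5. Each of these is a cleansing opportunity.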

Pattern recognition and validation

Recognize hidden patterns in your data columns, run validation checks, and transform invalid information so that all values follow the valid pattern.
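One common way to surface hidden patterns is to mask each value (letters to `A`, digits to `9`) and count the resulting shapes. Invalid values can then be transformed toward the dominant valid shape. The sketch below assumes a US-style phone format `(555) 867-5309` as the target pattern purely for illustration. The names `pattern_of`, `pattern_counts`, and `normalize_phone` are hypothetical.

```python
import re
from collections import Counter

def pattern_of(value):
    """Mask a value: letters become 'A', digits become '9', other characters stay."""
    return "".join("A" if c.isalpha() else "9" if c.isdigit() else c for c in value)

def pattern_counts(values):
    """How often each masked pattern occurs in a column."""
    return Counter(pattern_of(v) for v in values)

# Assumed target format for this example: US-style "(555) 867-5309".
US_PHONE = re.compile(r"\(\d{3}\) \d{3}-\d{4}")

def normalize_phone(value):
    """Return the value in the target format, or None if it cannot be repaired."""
    if US_PHONE.fullmatch(value):
        return value
    digits = re.sub(r"\D", "", value)          # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]                    # drop a leading country code
    if len(digits) != 10:
        return None                            # leave for manual review
    return f"({digits[:3]}) {digits[3:6]}-{digits[6:]}"
```

Here `normalize_phone("+1 555 867 5309")` and `normalize_phone("555.867.5309")` both become `"(555) 867-5309"`, while `"12345"` returns `None` so it can be routed to review instead of being guessed.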

Duplicate detection

Identify duplicates present in your data records by running suitable data matching algorithms and detecting fuzzy, numeric, exact, or phonetic variations of the same data.
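The match types named above can be illustrated with standard techniques. This is a hedged sketch, not DataMatch's proprietary algorithms. Exact matching compares normalized strings. Fuzzy matching uses `difflib.SequenceMatcher.ratio()`. Phonetic matching uses American Soundex applied word by word. Numeric matching uses an absolute tolerance. The threshold `0.85`, the tolerance `0.01`, and all function names are assumptions for the example.

```python
from difflib import SequenceMatcher
from itertools import combinations

SOUNDEX_CODES = {
    letter: digit
    for digit, letters in {"1": "bfpv", "2": "cgjkqsxz", "3": "dt",
                           "4": "l", "5": "mn", "6": "r"}.items()
    for letter in letters
}

def soundex(word):
    """American Soundex code, e.g. 'Robert' -> 'R163' (assumes ASCII letters)."""
    letters = [c for c in word.lower() if c.isalpha()]
    if not letters:
        return ""
    code = letters[0].upper()
    prev = SOUNDEX_CODES.get(letters[0], "")
    for c in letters[1:]:
        digit = SOUNDEX_CODES.get(c, "")
        if digit and digit != prev:
            code += digit
            if len(code) == 4:
                break
        if c not in "hw":   # h and w do not separate letters with the same code
            prev = digit    # vowels reset prev, so a repeated code after a vowel is kept
    return code.ljust(4, "0")

def phonetic_key(name):
    """Soundex code for each word, so 'Jon Smyth' -> ('J500', 'S530')."""
    return tuple(soundex(w) for w in name.split())

def fuzzy_score(a, b):
    """Similarity in [0, 1] based on matching character blocks."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def is_match(a, b, threshold=0.85):
    """Return the first method that links two names ('exact', 'fuzzy', 'phonetic') or None."""
    if a.strip().lower() == b.strip().lower():
        return "exact"
    if fuzzy_score(a, b) >= threshold:
        return "fuzzy"
    key = phonetic_key(a)
    if key and all(key) and key == phonetic_key(b):
        return "phonetic"
    return None

def numeric_match(x, y, tolerance=0.01):
    """Treat two numbers as the same value if they differ by at most 'tolerance'."""
    return abs(x - y) <= tolerance

def find_duplicate_pairs(names, threshold=0.85):
    """Compare every pair of names and report (i, j, method) for each match."""
    return [(i, j, method)
            for (i, a), (j, b) in combinations(enumerate(names), 2)
            if (method := is_match(a, b, threshold))]
```

For example, `"Jonathan Smith"` and `"Jonathon Smith"` match as `"fuzzy"`. `"Jon Smyth"` and `"John Smith"` share the phonetic key `("J500", "S530")`, so phonetic matching still links them when a strict fuzzy threshold would not. Comparing every pair is quadratic. Production tools usually reduce the number of comparisons (for example by grouping records on a shared key first) before scoring.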

Solution

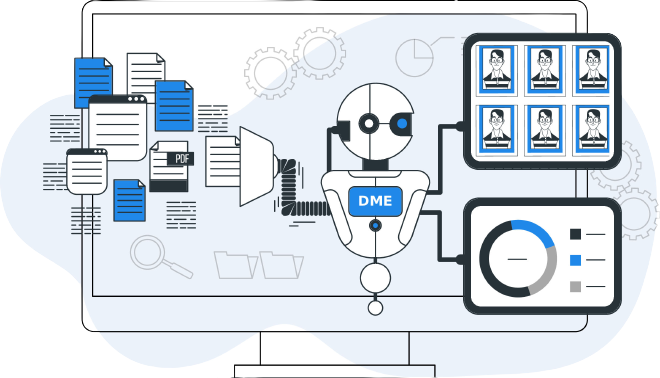

Let Data Ladder handle your data scrubbing process

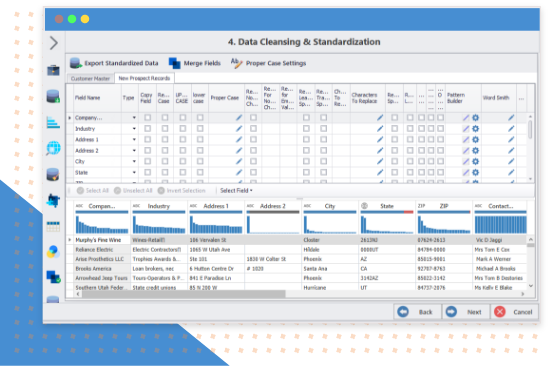

See DataMatch Enterprise at work

DataMatch Enterprise is a highly visual, intuitive data scrubbing tool with a full suite of features to inspect, reconcile, and remove data errors at scale, at an affordable cost.

DataMatch uses a wide range of industry-standard and proprietary algorithms to detect phonetic, fuzzy, mis-keyed, and abbreviated variations. The suite lets you build scalable configurations for data standardization, deduplication, record linkage, enhancement, and enrichment across datasets from multiple, disparate sources, such as Excel, text files, SQL and Hadoop-based repositories, and APIs.

Business benefits

How can data scrubbing benefit you?

Reconcile duplicate entries

Identify and remove duplicate company accounts and customer names to avoid processing multiple invoices and duplicate marketing campaigns.

Ensure regulatory compliance

Scrub data errors to help meet federal and international regulatory requirements, including KYC, AML, OFAC sanctions screening, and GDPR.

Define data standards and rules

Enforce an enterprise-wide data quality framework with data rules, file naming conventions, and formats for operational efficiency.

Enhance customer targeting

Clean contact name, address, email, and phone records to improve customer acquisition and retention and increase sales.

Prep data for actionable insights

Resolve data anomalies, including inconsistent formats, so your data yields accurate analytical insights for decision-making.

Improve employee productivity

Address data decay to save staff the considerable person-hours spent verifying contact addresses, emails, and phone numbers.

Let’s compare

How accurate is our solution?

In-house implementations face roughly a 10% annual risk of losing key personnel, so over five years, close to half of them lose the core team member who ran and understood the matching program.

Detailed tests covering 15 product comparisons were completed with universities, government agencies, and private companies (80K to 8M records), with the following results (note: these figures include the effect of false positives):
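The staff-turnover estimate can be made explicit with a worked calculation. This assumes the 10% figure is an independent yearly probability; the page does not say how it was derived. The chance that a given implementation loses its core member within five years is then:

$$
P(\text{loss within 5 years}) = 1 - (1 - 0.10)^{5} = 1 - 0.59049 \approx 0.41
$$

That is about 41%, close to half. Simply adding 10% per year for five years would give 50%, which slightly overstates the compounded risk.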

| Feature | Data Ladder | IBM QualityStage | SAS DataFlux | In-House Solutions | Comments |
|---|---|---|---|---|---|
| Match Accuracy (40K to 8M record samples) | 96% | 91% | 84% | 65-85% | Multi-threaded, in-memory, no-SQL processing optimized for speed and accuracy. Speed matters because the more match iterations you can run, the more accurate your results will be. |
| Software Speed | Very Fast | Fast | Fast | Slow | A metric for ease of use. Here, speed indicates time to first result, not necessarily full cleansing. |
| Time to First Result | 15 Minutes | 2 Months+ | 2 Months+ | 3 Months+ | |
| Purchasing/Licensing Cost | 80 to 95% Below Competition | $370K+ | $220K+ | $250K+ | Includes base license costs. |

Frequently asked questions

Got more questions? Check this out

The data scrubbing process can be planned in five phases:

- Define and plan: Identify the data that is important to your day-to-day operations.

- Assess: Understand what needs to be cleaned up, what information is missing, and what can be deleted.

- Execute: Run the cleansing process. Create workflows that standardize and cleanse incoming data so the process is easier to automate.

- Review: Audit and correct data that cannot be corrected automatically, such as phone numbers or emails.

- Manage and monitor: Continuous evaluation and monitoring of data are essential to maintaining reliable data quality.

Ready? Let's go

Try now or get a demo with an expert!
