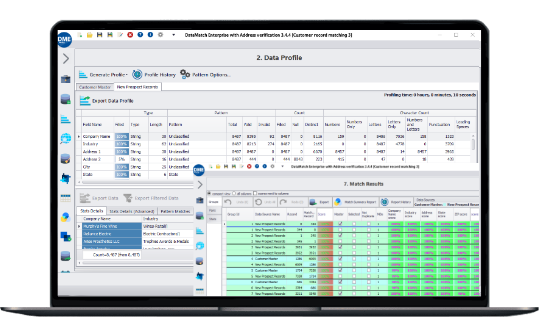

Accurate matching without friction

Enhance the quality of data spread across disparate sources by uncovering missed or overlooked matches using proprietary and established matching algorithms.

Why choose Data Ladder

- High match accuracy

- Real-time processing

- User-friendly UI

- Address verification

- Hands-on support

- ZIP+4 geocoding

Features

We take care of your complete DQM lifecycle

Import

Connect and integrate data from multiple disparate sources

Profiling

Automate data quality checks and get instant data profile reports

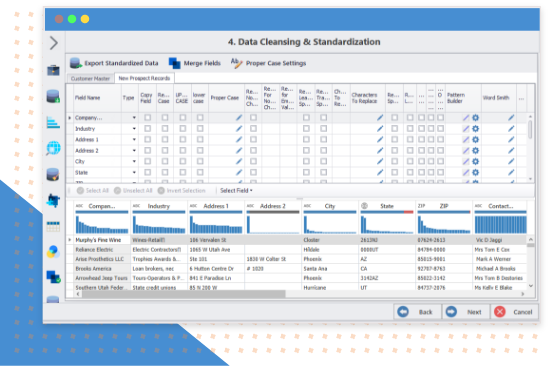

Cleansing

Standardize & transform datasets through various operations

Matching

Execute industry-grade data match algorithms on datasets

Deduplication

Eliminate duplicate values and records to preserve uniqueness

Merge & purge

Configure merge and survivorship rules to get the most out of data

USE CASES

A codeless solution that helps you

Link records across the enterprise

Resolve and reconcile entities

Match using fuzzy logic

Match and classify product data

Standardize address data

CUSTOMER STORIES

Still unsure? See why others prefer Data Ladder

DataMatch Enterprise™ was much easier to use than the other solutions we looked at. Being able to automate data cleaning and matching has saved us hundreds of person-hours each year.

We obtained a 24% higher match rate using DataMatch Enterprise™ versus our standard vendor.

We liked the ability of the product to categorize the data in the way that we need it, and its versatility in doing that.

Let’s compare

How accurate is our solution?

In-house implementations face about a 10% chance each year of losing key in-house personnel, so over 5 years roughly half of them lose the core member who ran and understood the matching program.
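The staffing-risk figure can be checked with a quick calculation. This is a minimal sketch that assumes the 10% figure is an annual rate and that each year is independent; both are assumptions, and the function name is hypothetical. Under those assumptions the five-year figure works out to about 41%, which is in the neighborhood of the "half" quoted above.

```python
def cumulative_loss_probability(annual_rate: float, years: int) -> float:
    """Chance of losing the core team member at least once over `years`.

    Assumes the same loss rate every year and independence between years
    (an illustrative assumption, not a figure from Data Ladder).
    """
    # Probability of keeping the person every single year, then the complement.
    return 1.0 - (1.0 - annual_rate) ** years


p = cumulative_loss_probability(annual_rate=0.10, years=5)
print(f"{p:.1%}")  # prints 41.0%
```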

Detailed tests across 15 product comparisons with university, government, and private-company datasets (80K to 8M records) produced the results below. Note: the figures include the effect of false positives.

| Features of the solution | Data Ladder | IBM Quality Stage | SAS Dataflux | In-House Solutions | Comments |
|---|---|---|---|---|---|
| Match Accuracy (samples of 40K to 8M records) | 96% | 91% | 84% | 65-85% | Multi-threaded, in-memory, no-SQL processing to optimize for speed and accuracy. Speed is important, because the more match iterations you can run, the more accurate your results will be. |
| Software Speed | Very Fast | Fast | Fast | Slow | A metric for ease of use. Here speed indicates time to first result, not necessarily full cleansing. |
| Time to First Result | 15 Minutes | 2 Months+ | 2 Months+ | 3 Months+ | |
| Purchasing/Licensing Cost | 80 to 95% Below Competition | $370K+ | $220K+ | $250K+ | Includes base license costs. |

INDUSTRIES

Doesn’t matter where you’re from

Professional services

Ensure a holistic data strategy for your mission-critical projects, including clear alignment between your data and business goals.

Implementation services

Get assistance in implementing Data Ladder software solutions, from setup to execution, for your data quality program.

Tailored programs

Get a customized data quality program, tailored to your business’s specific goals and challenges, that defines the scope and strategy required.

Training and certification

Learn the skills needed to apply Data Ladder solutions in both simple and complex scenarios via dedicated team or 1-to-1 product training.

SERVICES

Want expert advice on data quality?

Professional services

Implementation services

Training and certification

Tailored programs

Want to know more?

Check out our resources

Merging Data from Multiple Sources – Challenges and Solutions

Address standardization guide: What, why, and how?

Inaccurate and incomplete address data can cause your mail deliveries to be returned. In fact, the US postal service handled 6.5 billion pieces of UAA

What is data integrity and how can you maintain it?

While surveying 2,190 global senior executives, only 35% claimed that they trust their organization’s data and analytics. As data usage surges across various business functions,

Guide to data survivorship: How to build the golden record?

92% of organizations claim that their data sources are full of duplicate records. To make things worse, valuable information is present in every duplicate that

Ready? Let's go

Try now or get a demo with an expert!
