In 2003, the Social Security Administration (SSA) and the Department of Transportation (DOT) launched a joint investigation into the possible misuse of Social Security Numbers by airline pilots. The two departments compared their databases and found more than 3000 pilots who were receiving social security and disability benefits.

Without data matching, the investigation wouldn’t have been successful. But comparing two different datasets to identify matches comes with its own set of challenges.

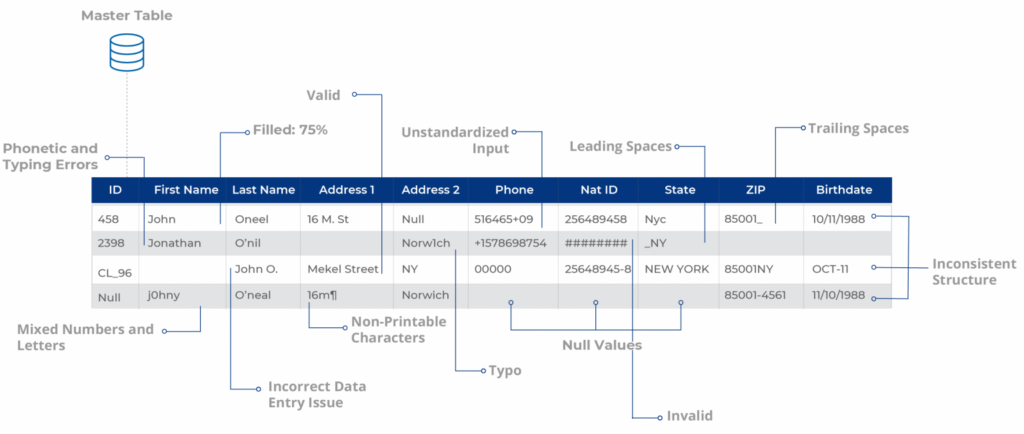

What if one pilot was listed as Johnathan Doe in the SSA database and John Doe in the DOT database? What if there were typos in their records? What if some of the information was intentionally or unintentionally withheld? Exact matching algorithms would fail to find duplicate records in all these scenarios.

This case, though two decades old, shows how fuzzy matching – a more sophisticated approach to data matching – can help create a single source of truth.

In this blog, we will take an in-depth look at fuzzy matching. We will cover:

- What is fuzzy matching?

- Why do businesses need fuzzy matching?

- How is fuzzy matching used in different industries?

- 20 common fuzzy matching techniques

- Pros and cons of fuzzy matching

- Improving fuzzy matching algorithms to minimize false positives and negatives

- Reducing false positives and negatives through data profiling

- Fuzzy matching scripts vs fuzzy matching software: Which is better?

- How to run fuzzy matching in DataMatch Enterprise

What is Fuzzy Matching?

Fuzzy matching is a more nuanced approach to data matching. Rather than flagging records as a ‘match’ or ‘non-match’ based on exact match algorithms, fuzzy matching identifies the likelihood that two records are a true match based on whether they agree or disagree on multiple identifiers.

The identifiers, or parameters, are defined by users, who also assign a weight to each one. Some of the parameters may include:

- Name Variations – Including nicknames, initials, and different spellings.

- Addresses – Accounting for differences in formatting, abbreviations, or misspellings.

- Dates – Handling variations in date formats or minor inaccuracies.

- Email Addresses – Recognizing different domains or slight misspellings.

- Phone Numbers – Considering different formats or country codes.

- Identifiers – Such as social security numbers or customer IDs with slight variations or typos.

If the parameters are too broad, there is an increased chance of false positives, and if the criteria are too narrow, there will be false negatives.

- False positive – A pair that your algorithm or fuzzy matching software of choice identifies as a match, but that manual review shows to be two different entities.

- False negative – A pair of records that refer to the same entity but are missed by the system, and so receive no match score, because of narrow match criteria or data errors.

In both cases, the results are less than optimal, and that’s why it is important to have the right parameters in place with the right weightage assigned to them.

Consider the strings “Kent” and “10th”. While there is clearly no match here, some popular fuzzy matching algorithms can still rate these two strings nearly 50% similar, based on character count and phonetic match.

Why Do Businesses Need Fuzzy Matching?

Along with human error, data duplication is the biggest data quality issue faced by most organizations.

60% of organizations face data duplication issues. And, it’s not easy to catch duplicate records. The majority of duplicates are non-exact matches and therefore usually remain undetected. Fuzzy matching software helps you make those connections automatically using sophisticated proprietary matching logic, regardless of spelling errors, unstandardized data, or incomplete information.

But it’s not just about data deduplication. From a strategic perspective, fuzzy matching comes into play when you’re conducting record linkage or entity resolution. We touched upon this briefly in the previous section too; the fuzzy matching approach is invaluable when creating a Single Source of Truth for business analytics or building a foundation for Master Data Management (MDM), helping organizations integrate data from dozens of different sources across the enterprise while ensuring accuracy and minimizing manual review.

Here are some ways that fuzzy matching is used to improve the bottom line:

- Realize a single customer view – Fuzzy matching merges fragmented customer records to create a unified view, enabling better customer service and targeted marketing.

- Work with clean data you can trust – It identifies and merges duplicate records, ensuring data accuracy and reliability for informed decision-making.

- Prepare data for business intelligence – By cleansing and consolidating data, fuzzy matching enhances the quality of datasets used in business intelligence tools, leading to more accurate insights and analyses.

- Enhance the accuracy of your data for operational efficiency – It reduces errors and inconsistencies in operational data, streamlining processes and improving overall efficiency in business operations.

- Enrich data for deeper insights – Fuzzy matching combines disparate data sources, enriching datasets and enabling deeper, more comprehensive analysis.

- Ensure better compliance – It helps maintain accurate and consistent records, essential for meeting regulatory compliance and avoiding legal issues.

- Refine customer segmentation – By accurately merging customer records, fuzzy matching improves the precision of customer segmentation, allowing for more effective marketing strategies.

- Improve fraud prevention – It detects subtle variations in fraudulent entries, enhancing the ability to identify and prevent fraud effectively.

Bonus read: See how a major healthcare provider was able to save hundreds of man-hours annually through fuzzy matching.

How is Fuzzy Matching Used in Different Industries?

Data duplication is a massive problem across industries, and that’s why fuzzy data matching is used in sectors ranging from healthcare to media and entertainment.

Healthcare

Healthcare providers use fuzzy matching to link patient records across various healthcare systems. This helps in maintaining comprehensive medical histories even with slight variations in patient data.

Fuzzy matching also helps identify and merge duplicate patient records caused by typos or inconsistent data entry.

Finance and Insurance

Financial institutions use fuzzy matching to identify potential fraud by matching slightly different versions of names or account details. This helps detect and prevent fraudulent activities.

These institutions also use fuzzy matching for customer data management. Fuzzy matching software helps compare customer information across different systems, avoiding issues with account management due to inconsistent data.

Education

Educational institutions use fuzzy matching to merge student records with different name or address variations. Besides student records management, these institutions also use fuzzy matching to track alumni by matching records with slight differences. This helps in maintaining up-to-date contact information for fundraising and networking.

Government

Government agencies link public records such as tax records, social security information, and voting records using fuzzy matching. They also use fuzzy matching software for fraud prevention and detection. The software detects fraudulent activities by identifying individuals attempting to manipulate records with minor variations in their personal information.

Sales and Marketing

Companies use fuzzy matching to consolidate customer data from various sources, creating accurate and complete customer profiles crucial for targeted marketing campaigns. They also use it to identify and merge duplicate leads in CRM systems, improving sales process efficiency and effectiveness.

Retail

Retailers manage product catalogs by identifying and merging duplicate product listings with slight name or description variations. They also use matching software to make sure customer service representatives have consistent and accurate customer information, even with minor data discrepancies.

Telecommunications

Telecommunication companies use fuzzy matching to merge customer records from different systems, ensuring accurate billing and customer service. They identify potential fraud by matching customer information across various accounts, even with slight discrepancies in data.

E-commerce

E-commerce companies use fuzzy matching to enhance product recommendation engines by recognizing similar product names and descriptions. This helps them provide more accurate recommendations to customers. They also use it to aggregate customer reviews that mention the same product using different spellings or terms.

Legal Services

Law firms use fuzzy matching to identify and link related documents, even if they contain slight variations in text. This aids in thorough and efficient document review processes. They also use fuzzy matching to merge client records with slight variations in name or address, ensuring a single, accurate record for each client.

Logistics and Transportation

Logistics companies use fuzzy matching to track shipments by matching data from different sources, even if there are minor inconsistencies in the details. Fuzzy matching also helps in identifying and merging supplier records with slight variations. This helps in maintaining accurate supplier information for procurement and logistics operations.

Hospitality and Travel

Hotels and travel companies use fuzzy matching to consolidate guest records with variations in names or booking details. This helps them maintain accurate records and offer personalized service. They also use fuzzy matching to enhance booking systems by recognizing and linking similar entries.

Real Estate

Real estate platforms use fuzzy matching to consolidate property listings with slight variations in address or property details. Fuzzy matching also helps refine data used in recommendation engines to match clients with properties based on their preferences.

Media and Entertainment

Media companies use fuzzy matching to aggregate content from various sources, even with slight variations in titles or descriptions. This helps them maintain comprehensive media libraries.

Bonus read: Read about these eight benefits of data matching that will help you grow your business.

20 Common Fuzzy Matching Techniques

Now you know what fuzzy matching is and the many different ways you can use it to grow your business. The question is, how do you go about implementing fuzzy matching processes in your organization?

There are multiple fuzzy matching techniques that are used in different scenarios. Let’s look at these methods in detail.

1. Levenshtein Distance (or Edit Distance)

The Levenshtein Distance method measures the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one string into another. It is commonly used to identify spelling mistakes or minor differences between strings. For example, the distance between “kitten” and “sitting” is 3 (replace ‘k’ with ‘s’, replace ‘e’ with ‘i’, and add ‘g’).

This is commonly used in spell checkers, autocorrect features, and DNA sequence analysis to identify and correct errors or find the closest match to a given string.
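
To make the definition concrete, here is a minimal Python sketch of the classic dynamic-programming approach. The function name `levenshtein` is our own choice for illustration; production code would more likely rely on a tested library.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    # previous[j] = distance between the processed prefix of a and the first j chars of b
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(
                previous[j] + 1,         # delete ca
                current[j - 1] + 1,      # insert cb
                previous[j - 1] + cost,  # substitute, or keep if equal
            ))
        previous = current
    return previous[-1]


print(levenshtein("kitten", "sitting"))  # 3
```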

2. Damerau-Levenshtein Distance

The Damerau-Levenshtein Distance method is an extension of Levenshtein Distance that also considers transpositions (swapping of two adjacent characters) as a single edit operation, which is useful for identifying typographical errors. For example, the distance between “ca” and “ac” is 1, due to the transposition of ‘c’ and ‘a’.

This is often used in text processing and error correction systems where typographical errors, such as transpositions, are common.
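
The sketch below shows one way to add the transposition rule in Python. Note the assumption: it implements the restricted variant (often called optimal string alignment), which never edits the same substring twice; the unrestricted Damerau-Levenshtein algorithm is slightly more involved. The name `osa_distance` is illustrative.

```python
def osa_distance(a: str, b: str) -> int:
    """Edit distance where swapping two adjacent characters counts as one edit."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            # adjacent transposition, e.g. "ca" -> "ac"
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]


print(osa_distance("ca", "ac"))  # 1
```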

3. Jaro-Winkler Distance

This is a string comparison algorithm that measures the similarity between two strings, giving more favorable ratings to strings that match from the beginning. It is often used for comparing short strings such as names. For example, it is particularly useful in name matching, like comparing “Martha” and “Marhta”.

This is used for fuzzy name matching in databases, deduplication of contact lists, and record linkage systems.
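
Here is a rough Python sketch of the similarity calculation, assuming the commonly used defaults (a prefix scale of 0.1 and a prefix length capped at four characters). The function names are ours, and this is an illustration rather than a reference implementation.

```python
def jaro(s1: str, s2: str) -> float:
    """Jaro similarity in [0, 1]."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        # look for an unmatched equal character within the allowed window
        for j in range(max(0, i - window), min(len2, i + window + 1)):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # count matched characters that appear in a different order
    k = 0
    out_of_order = 0
    for i in range(len1):
        if matched1[i]:
            while not matched2[k]:
                k += 1
            if s1[i] != s2[k]:
                out_of_order += 1
            k += 1
    transpositions = out_of_order // 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1: str, s2: str, prefix_scale: float = 0.1) -> float:
    """Boost the Jaro score for strings that share a prefix (up to 4 chars)."""
    score = jaro(s1, s2)
    prefix = 0
    for c1, c2 in zip(s1[:4], s2[:4]):
        if c1 != c2:
            break
        prefix += 1
    return score + prefix * prefix_scale * (1 - score)


print(round(jaro_winkler("Martha", "Marhta"), 4))  # 0.9611
```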

4. Keyboard Distance

This technique measures the similarity between two strings based on the physical distance between characters on a keyboard, accounting for common typographical errors due to finger proximity. For example, the distance between “qwerty” and “qwdrty” is lower than the distance between “qwerty” and “asdfgh” due to the proximity of the keys.

This is implemented in spell checkers and typo correction tools that consider common typing errors based on the keyboard layout.
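
As a simplified illustration, the Python sketch below places the letter keys of a QWERTY layout on a plain grid and sums the straight-line distance between corresponding characters. The assumptions are ours: rows are treated as perfectly aligned (real keyboards are staggered), only letters are handled, and both strings must have the same length.

```python
import math

# Simplified QWERTY layout: (row, column) for each letter key.
QWERTY_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEY_POS = {
    ch: (row, col)
    for row, keys in enumerate(QWERTY_ROWS)
    for col, ch in enumerate(keys)
}


def keyboard_distance(a: str, b: str) -> float:
    """Sum of key-to-key distances between characters at the same position."""
    if len(a) != len(b):
        raise ValueError("this sketch only compares strings of equal length")
    total = 0.0
    for ca, cb in zip(a.lower(), b.lower()):
        (r1, c1), (r2, c2) = KEY_POS[ca], KEY_POS[cb]
        total += math.hypot(r1 - r2, c1 - c2)
    return total


print(keyboard_distance("qwerty", "qwdrty"))  # 1.0
print(keyboard_distance("qwerty", "asdfgh"))  # 6.0
```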

5. Kullback-Leibler Distance

This technique quantifies how one probability distribution diverges from a second, expected probability distribution. It is not commonly used in string matching but more in comparing distributions. For example, it is used to compare the distribution of words in different documents.

This is mainly used in information theory and statistics to compare probability distributions, and not typically for fuzzy string matching.
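
For completeness, here is a small Python sketch that compares the word distributions of two short documents. The add-one smoothing is our assumption, included so that no probability is zero; the sample sentences and function names are made up for illustration.

```python
import math
from collections import Counter


def word_distribution(text, vocabulary, smoothing=1.0):
    """Smoothed probability of each vocabulary word in the text."""
    counts = Counter(text.lower().split())
    total = sum(counts[w] for w in vocabulary) + smoothing * len(vocabulary)
    return {w: (counts[w] + smoothing) / total for w in vocabulary}


def kl_divergence(p, q):
    """How much distribution p diverges from distribution q (not symmetric)."""
    return sum(p[w] * math.log(p[w] / q[w]) for w in p)


doc_a = "fuzzy matching links similar records"
doc_b = "exact matching links identical records"
vocab = sorted(set(doc_a.split()) | set(doc_b.split()))
p = word_distribution(doc_a, vocab)
q = word_distribution(doc_b, vocab)
print(kl_divergence(p, q))  # positive, because the distributions differ
```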

6. Jaccard Index

The Jaccard Index measures the similarity between two sets by dividing the size of the intersection by the size of the union of the sets. In string matching, it compares the sets of character n-grams of the strings. For example, comparing the sets of bigrams (pairs of adjacent characters) in “night” and “nacht” would give a similarity score based on common bigrams.

This is applied in clustering algorithms, text analysis, and in measuring the similarity of sets, such as in plagiarism detection.
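
The bigram comparison can be sketched in a few lines of Python. The helper names are illustrative, and treating two strings with no bigrams as identical is a choice we made for the sketch.

```python
def bigrams(text: str) -> set:
    """Set of adjacent character pairs in the string."""
    return {text[i:i + 2] for i in range(len(text) - 1)}


def jaccard(a: str, b: str) -> float:
    """Size of the bigram intersection divided by the size of the union."""
    set_a, set_b = bigrams(a), bigrams(b)
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


# "night" and "nacht" share one bigram ("ht") out of seven distinct ones.
print(round(jaccard("night", "nacht"), 3))  # 0.143
```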

7. Metaphone 3

This is a phonetic algorithm for indexing words by their sound when pronounced in English, designed to account for variations in spelling and pronunciation. Metaphone 3 is an updated version that improves accuracy. For example, “Smith” and “Smyth” would be indexed similarly, reflecting their phonetic similarity.

This is used in phonetic matching systems to index words by their sound, helpful in applications like genealogy research and search engines.

8. Name Variant

This is a method that accounts for common variations in names, including nicknames, diminutives, and alternative spellings, to improve matching accuracy in databases. For example, “Bob”, “Robert”, and “Rob” could refer to the same person.

This is used in customer relationship management (CRM) systems to match records with common name variations.
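
In its simplest form, this can be a lookup table that maps each variant to a canonical name before comparison. The table below is a tiny hypothetical sample; real systems typically rely on much larger curated dictionaries.

```python
# Hypothetical sample table: variant -> canonical first name.
NICKNAMES = {
    "bob": "robert",
    "rob": "robert",
    "bobby": "robert",
    "bill": "william",
    "liz": "elizabeth",
}


def canonical_first_name(name: str) -> str:
    key = name.strip().lower()
    return NICKNAMES.get(key, key)


def same_first_name(a: str, b: str) -> bool:
    """True if both names resolve to the same canonical form."""
    return canonical_first_name(a) == canonical_first_name(b)


print(same_first_name("Bob", "Robert"))  # True
print(same_first_name("Bob", "Bill"))    # False
```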

9. Syllable Alignment

This is a technique that compares strings based on the alignment of their syllables, which can be particularly useful in matching names or other text with similar phonetic patterns. For example, matching “Alexander” with “Aleksandr” by aligning the syllables phonetically.

This is used in applications where phonetic similarity is relevant, such as language learning apps or automated transcription services.

10. Acronym

This method involves matching strings by recognizing acronyms and their expanded forms, useful in contexts where abbreviations are commonly used. For example, matching “NASA” with “National Aeronautics and Space Administration”.

This is commonly used in document management systems, search engines, and databases where abbreviations are frequently used.
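
A naive Python sketch of the idea: build an acronym from the first letter of each significant word and compare it with the short form. The stop-word list is an assumption on our part, and real acronyms often break this simple rule.

```python
# Small, assumed list of words that acronyms usually skip.
STOPWORDS = {"and", "of", "the", "for", "in"}


def acronym(phrase: str) -> str:
    """First letter of each word that is not a stop word, upper-cased."""
    return "".join(
        word[0].upper() for word in phrase.split()
        if word.lower() not in STOPWORDS
    )


def matches_acronym(short_form: str, phrase: str) -> bool:
    return short_form.upper() == acronym(phrase)


print(matches_acronym("NASA", "National Aeronautics and Space Administration"))  # True
```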

11. Soundex

Soundex is a phonetic algorithm that indexes words by their sound when pronounced in English, used primarily for matching names with similar sounds but different spellings. For example, the names “Robert” and “Rupert” both encode to “R163” using the Soundex algorithm, indicating they sound similar despite different spellings.

Genealogy and historical research databases often use Soundex to match similar-sounding names that have different spellings due to transcription errors or regional variations.
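
The sketch below follows the commonly described American Soundex rules: keep the first letter, encode the following consonants as digits, collapse repeated codes, and pad to four characters. It is meant as an illustration; the function name and structure are our own.

```python
# Consonant groups and their Soundex digits.
CODES = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        CODES[ch] = digit


def soundex(name: str) -> str:
    letters = [ch for ch in name.lower() if ch.isalpha()]
    if not letters:
        return ""
    first = letters[0]
    encoded = []
    previous = CODES.get(first, "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # h and w do not separate letters that share a code
        code = CODES.get(ch, "")  # vowels get no code
        if code and code != previous:
            encoded.append(code)
        previous = code  # a vowel resets this, so a repeated code is kept
    return (first.upper() + "".join(encoded) + "000")[:4]


print(soundex("Robert"), soundex("Rupert"))  # R163 R163
```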

12. Double Metaphone

An improvement over the original Metaphone algorithm, Double Metaphone returns two phonetic encodings for each word to account for different possible pronunciations. For example, the names “Smith” and “Smyth” both encode to “SM0” using Double Metaphone, helping to match names with different spellings but similar pronunciations.

This algorithm is used in databases to account for different pronunciations and regional accents, providing more accurate name matching in diverse datasets.

13. Cosine Similarity

This technique measures the cosine of the angle between two non-zero vectors in a multidimensional space, often used for text matching by comparing term frequency vectors. For example, comparing the phrases “data science” and “science data” using cosine similarity on their term frequency vectors results in a high similarity score, despite the word order difference.

Cosine similarity is often used in text analysis and information retrieval to measure the similarity between documents or search queries and documents.
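
A minimal Python sketch, assuming simple whitespace tokenization and raw term counts as the vector components:

```python
import math
from collections import Counter


def cosine_similarity(a: str, b: str) -> float:
    """Cosine of the angle between the term-frequency vectors of two texts."""
    vec_a = Counter(a.lower().split())
    vec_b = Counter(b.lower().split())
    dot = sum(vec_a[term] * vec_b[term] for term in vec_a)
    norm_a = math.sqrt(sum(v * v for v in vec_a.values()))
    norm_b = math.sqrt(sum(v * v for v in vec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Word order does not matter, so the score is (up to rounding) 1.0.
print(cosine_similarity("data science", "science data"))
```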

14. Term Frequency-Inverse Document Frequency (TF-IDF)

This is a statistical measure used to evaluate the importance of a word in a document relative to a collection of documents. It is useful in text mining and information retrieval. For example, in a collection of documents, the term “machine learning” might have a high TF-IDF score in a document specifically about AI, indicating its importance relative to other documents.

This method is used in fuzzy search algorithms and recommendation systems to identify and rank the importance of terms within documents, enhancing the relevance of search results and recommendations.
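
Here is one way the calculation could look in Python. We assume the plain textbook variant (term frequency multiplied by the natural log of total documents over documents containing the term); libraries often apply additional smoothing. The sample documents are made up.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: score} dict per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # number of documents in which each term appears at least once
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


docs = [
    "the model uses machine learning",
    "the recipe uses pasta",
    "the train uses diesel",
]
scores = tf_idf(docs)
print(scores[0]["the"])      # 0.0, because "the" appears in every document
print(scores[0]["machine"])  # positive, because "machine" is specific to one document
```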

15. N-Gram Similarity

N-Gram similarity breaks down strings into contiguous sequences of n characters and compares the frequency of these n-grams in the strings to determine similarity. For example, comparing the bigrams (two-character sequences) in “night” (“ni”, “ig”, “gh”, “ht”) and “nacht” (“na”, “ac”, “ch”, “ht”) shows some overlap, indicating partial similarity.

This method is used in spell-checking and text correction systems to find and suggest corrections for misspelled words based on common character sequences.

16. Hamming Distance

Hamming Distance measures the number of positions at which the corresponding symbols in two strings of equal length are different, primarily used for error detection and correction. For example, the Hamming distance between “karolin” and “kathrin” is 3, as there are three positions where the characters differ (‘r’ vs. ‘t’, ‘o’ vs. ‘h’, ‘l’ vs. ‘r’).

This method is used in error detection and correction systems, such as detecting and correcting single-bit errors in data transmission.
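
In Python, the calculation is a one-liner once the equal-length requirement is checked:

```python
def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance requires strings of equal length")
    return sum(ca != cb for ca, cb in zip(a, b))


print(hamming("karolin", "kathrin"))  # 3
```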

17. Smith-Waterman Algorithm

A dynamic programming algorithm used for local sequence alignment, the Smith-Waterman algorithm identifies regions of similarity between two sequences. For example, aligning the sequences “ACACACTA” and “AGCACACA” using the Smith-Waterman algorithm identifies the optimal local alignment, highlighting regions of similarity.

This algorithm is widely used in bioinformatics for DNA and protein sequence alignment to find regions of similarity between biological sequences.
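
The sketch below computes only the best local alignment score, not the alignment itself. The scoring values (+2 for a match, -1 for a mismatch, -1 per gap) are assumptions chosen for illustration; real applications usually use substitution matrices and tuned gap penalties.

```python
def smith_waterman_score(a: str, b: str, match: int = 2,
                         mismatch: int = -1, gap: int = -1) -> int:
    """Best local alignment score between two sequences."""
    rows, cols = len(a) + 1, len(b) + 1
    h = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diagonal = h[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # a score never drops below zero, which is what makes the alignment local
            h[i][j] = max(0, diagonal, h[i - 1][j] + gap, h[i][j - 1] + gap)
            best = max(best, h[i][j])
    return best


print(smith_waterman_score("ACACACTA", "AGCACACA"))  # 12
```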

18. Monge-Elkan Method

This is a hybrid approach that combines several matching algorithms to compute a similarity score based on the best matches between components of composite names or terms. For example, when comparing the names “John Smith” and “Jon Smythe”, the Monge-Elkan method combines multiple algorithms (e.g., Jaro-Winkler for “John” vs. “Jon” and Levenshtein for “Smith” vs. “Smythe”) to compute an overall similarity score.

This method is used in complex name matching tasks where a combination of algorithms improves accuracy, such as in customer relationship management systems.
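
A compact Python sketch of the idea: for every token in the first string, take its best similarity against the tokens of the second string, then average. To stay within the standard library, we swapped in `difflib.SequenceMatcher` as the token-level measure; in practice you might plug in Jaro-Winkler or a normalized Levenshtein score through the `inner` parameter (a name we chose for this sketch).

```python
from difflib import SequenceMatcher


def token_similarity(a: str, b: str) -> float:
    """Stand-in token similarity in [0, 1], based on difflib's ratio."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def monge_elkan(a: str, b: str, inner=token_similarity) -> float:
    """Average, over tokens of a, of the best match among the tokens of b."""
    tokens_a, tokens_b = a.split(), b.split()
    if not tokens_a or not tokens_b:
        return 0.0
    best_scores = [max(inner(ta, tb) for tb in tokens_b) for ta in tokens_a]
    return sum(best_scores) / len(tokens_a)


print(monge_elkan("John Smith", "Jon Smythe"))
print(monge_elkan("John Smith", "Mary Jones"))
```

Note that the score is not symmetric: swapping the two arguments can change the result.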

19. SoftTF-IDF

This method combines the traditional TF-IDF weighting scheme with a secondary similarity measure, often used in natural language processing for improved text matching accuracy. For example, in a collection of documents, “machine learning” and “ML” might be identified as related terms, with SoftTF-IDF adjusting the similarity score based on their contextual similarity.

This method is used in advanced natural language processing tasks.

20. Dice’s Coefficient

Dice’s coefficient measures the similarity between two sets by calculating the ratio of twice the intersection size to the sum of the sizes of the two sets. For example, comparing the sets of bigrams for “night” (“ni”, “ig”, “gh”, “ht”) and “nacht” (“na”, “ac”, “ch”, “ht”) using Dice’s coefficient results in a similarity score based on the shared bigrams.

This method is used in information retrieval and text analysis to measure the similarity between sets, such as documents or search queries.
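
Using character bigrams as the sets, the coefficient can be sketched in Python as follows (helper names are illustrative):

```python
def bigram_set(text: str) -> set:
    """Set of adjacent character pairs in the string."""
    return {text[i:i + 2] for i in range(len(text) - 1)}


def dice_coefficient(a: str, b: str) -> float:
    """Twice the shared bigrams divided by the total bigrams in both sets."""
    set_a, set_b = bigram_set(a), bigram_set(b)
    if not set_a and not set_b:
        return 1.0
    return 2 * len(set_a & set_b) / (len(set_a) + len(set_b))


# One shared bigram ("ht"), four bigrams on each side: 2 * 1 / (4 + 4)
print(dice_coefficient("night", "nacht"))  # 0.25
```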

Pros and Cons of Fuzzy Matching

Since fuzzy matching is based on a probabilistic approach to identifying matches, it can offer a wide range of benefits such as:

- Higher matching accuracy – Fuzzy matching proves to be a far more accurate method of finding matches across two or more datasets. Unlike deterministic matching, which determines matches on a 0 or 1 basis, fuzzy matching can detect variations that lie between 0 and 1 based on a given matching threshold.

- Provides solutions to complex data – Fuzzy logic also enables users to find matches by linking records that consist of slight variations in the form of spelling, casing, and formatting errors, null values, etc., making it better-suited for real-world applications where typos, system errors, and other data errors can occur. This also includes dynamic data that become obsolete or must be updated constantly such as job title and email address.

- Easily configurable to control false positives – When the number of false positives needs to be lowered or raised to suit business requirements, users can easily adjust the matching threshold to tighten the results or surface more matches for manual inspection. This gives users added flexibility when tailoring fuzzy logic algorithms to specific matching requirements.

- Better suited to finding matches without a consistent unique identifier – Having unique identifier data, such as SSN or date of birth, is critical for finding matches across disparate data sources in the case of deterministic matching. However, using a statistical analysis approach, fuzzy matching can help find duplicates even without consistent identifier data.

Fuzzy matching is not without limitations. Some of these limitations include:

- Can incorrectly link different entities – Despite the configurability available in fuzzy matching, a high number of false positives caused by incorrectly linking seemingly similar but different entities can lead to more time spent manually checking duplicates against unique identifiers.

- Difficult to scale across larger datasets – Fuzzy logic can be difficult to scale across millions of data points especially in the case of disparate data sources.

- Can require considerable testing for validation – The rules defined in the algorithms must be constantly refined and tested to ensure they are able to run matches with high accuracy.

Improving Fuzzy Matching Algorithms to Minimize False Positives and Negatives

To improve data quality and reliability, you have to balance the reduction of false positives and false negatives. By implementing a combination of weighted parameters, threshold tuning, continuous feedback loops, and multiple other methods, organizations can significantly improve the performance of their fuzzy matching systems.

How to Reduce False Positives

False positives occur when the algorithm incorrectly identifies two non-matching records as a match. To reduce false positives, use:

Weighted Parameters

Assign different weights to various attributes based on their importance and reliability. For example, in a customer database, assign higher weights to unique identifiers such as email addresses and phone numbers compared to less unique attributes like first names.
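
As a rough illustration, the Python sketch below combines per-field similarity scores into one weighted score. The field names, the weights, and the use of `difflib.SequenceMatcher` as the similarity measure are all assumptions made for this example.

```python
from difflib import SequenceMatcher

# Hypothetical weights: more distinctive fields count for more.
WEIGHTS = {"email": 0.5, "phone": 0.3, "first_name": 0.2}


def field_similarity(a: str, b: str) -> float:
    if not a or not b:
        return 0.0  # a missing value is not evidence of a match
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def weighted_score(rec1: dict, rec2: dict, weights=WEIGHTS) -> float:
    """Weighted average of field similarities, in [0, 1]."""
    total_weight = sum(weights.values())
    weighted_sum = sum(
        weight * field_similarity(rec1.get(field, ""), rec2.get(field, ""))
        for field, weight in weights.items()
    )
    return weighted_sum / total_weight


a = {"email": "j.doe@example.com", "phone": "555-0100", "first_name": "Johnathan"}
b = {"email": "j.doe@example.com", "phone": "555-0100", "first_name": "John"}
c = {"email": "other@mail.org", "phone": "555-0199", "first_name": "Johnathan"}

print(weighted_score(a, b))  # high: email and phone agree
print(weighted_score(a, c))  # lower: only the first name agrees
```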

Threshold Tuning

Adjust the similarity threshold to a level that reduces the likelihood of false matches. Conduct experiments to find an optimal threshold where the number of false positives is minimized without significantly increasing false negatives. Regularly review and adjust thresholds based on the types of data and specific use cases to maintain a balance.
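
One way to run such an experiment is to score a set of manually labeled pairs and measure precision and recall at several thresholds. The scores and labels below are invented purely to show the mechanics.

```python
def precision_recall(scored_pairs, threshold):
    """scored_pairs: list of (similarity_score, is_true_match) tuples."""
    tp = sum(1 for score, is_match in scored_pairs if score >= threshold and is_match)
    fp = sum(1 for score, is_match in scored_pairs if score >= threshold and not is_match)
    fn = sum(1 for score, is_match in scored_pairs if score < threshold and is_match)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


# Hypothetical labeled sample: (score from your matcher, verified by a reviewer)
labeled = [(0.95, True), (0.88, True), (0.82, False),
           (0.74, True), (0.61, False), (0.40, False)]

for threshold in (0.6, 0.7, 0.8, 0.9):
    precision, recall = precision_recall(labeled, threshold)
    print(f"threshold={threshold:.1f} precision={precision:.2f} recall={recall:.2f}")
```

Raising the threshold removes false positives (higher precision) at the cost of missed matches (lower recall), which is the trade-off described above.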

Multi-Step Matching

Use a hierarchical or multi-step approach to matching, where initial broad matches are refined through more stringent criteria. Start with a loose match on broader attributes and progressively apply tighter criteria to filter out false positives. This approach helps to ensure that only records that meet multiple criteria are considered matches.
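
A small Python sketch of this idea: group records into loose blocks, then apply progressively stricter checks within each block. The field names, thresholds, and the choice of the first letter of the name as the blocking key are assumptions for illustration.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def multi_step_match(records, name_threshold=0.8, city_threshold=0.9):
    # Step 1: loose grouping so only plausible pairs are compared.
    blocks = defaultdict(list)
    for record in records:
        blocks[record["name"][:1].lower()].append(record)

    matches = []
    for block in blocks.values():
        for rec1, rec2 in combinations(block, 2):
            # Step 2: names must be similar.
            if similarity(rec1["name"], rec2["name"]) < name_threshold:
                continue
            # Step 3: a stricter check on a second attribute.
            if similarity(rec1["city"], rec2["city"]) < city_threshold:
                continue
            matches.append((rec1["id"], rec2["id"]))
    return matches


records = [
    {"id": 1, "name": "Jon Smith", "city": "Boston"},
    {"id": 2, "name": "John Smith", "city": "Boston"},
    {"id": 3, "name": "John Smith", "city": "Denver"},
    {"id": 4, "name": "Mary Jones", "city": "Boston"},
]
print(multi_step_match(records))  # [(1, 2)]
```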

Phonetic Matching

Use phonetic algorithms like Soundex or Metaphone to account for variations in spelling while ensuring phonetic similarity. Combine phonetic matching with other techniques to improve accuracy. Phonetic matching helps in reducing false positives by focusing on how names sound rather than just how they are spelled.

Contextual Analysis

Incorporate contextual information into the matching process. Use additional contextual data, such as geographic location or transaction history, to confirm matches. Contextual analysis adds another layer of verification, reducing the chances of false positives.

How to Reduce False Negatives

False negatives occur when the algorithm fails to identify two matching records as a match. To reduce false negatives, use:

Advanced Similarity Metrics

Utilize advanced similarity metrics like Jaro-Winkler or Damerau-Levenshtein that consider more types of variations. Implement these algorithms to handle common data entry errors, such as transpositions and typographical mistakes.

Advanced metrics improve the algorithm’s ability to recognize true matches despite minor discrepancies.

N-Gram Analysis

Use n-gram analysis to break down strings into smaller components for comparison. Compare n-grams (e.g., bigrams or trigrams) to identify similarities between records that might not match exactly. N-gram analysis is particularly effective for text data where small variations in word order or spelling occur.

Machine Learning Integration

Train machine learning (ML) models to learn and predict matches based on historical data. You can train ML models on labeled datasets to identify patterns and improve matching accuracy over time. Machine learning can adapt to new patterns and improve matching accuracy by learning from past matches and mismatches.

Hybrid Approaches

Combine multiple matching techniques to leverage their strengths and mitigate their weaknesses. Use a combination of phonetic, syntactic, and semantic matching techniques to improve overall accuracy. Using hybrid approaches will balance precision and recall, reducing both false positives and false negatives.

Continuous Feedback Loop

Implement a system for continuous feedback and manual review. Allow users to review and correct matches and use this feedback to improve the algorithm. Continuous feedback helps in refining the algorithm, making it more robust against false negatives over time.

Reducing False Positives and Negatives Through Data Profiling

The most effective method to minimize both false positives and negatives is to profile and clean the data sources separately before you conduct matching. Leading data matching solution providers typically bundle a data profiler that quickly provides enough metadata to construct a cogent profile analysis of data quality, such as missing values, lack of standardization, and any other discrepancies in your data. By profiling your data, you can quickly quantify the scope and depth of the primary project, whether it’s Master Data Management, matching, cleansing, deduplication, or standardization.
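
To give a feel for what a basic profile contains, here is a minimal Python sketch that counts missing and distinct values per column. It is a toy example with invented records, not a description of how any particular profiling tool works.

```python
def profile(records, columns):
    """Per-column counts of missing values and distinct values."""
    report = {}
    for column in columns:
        values = [(record.get(column) or "").strip() for record in records]
        present = [value for value in values if value]
        report[column] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            # fewer values here than in "distinct" hints at inconsistent casing
            "distinct_ignoring_case": len({value.casefold() for value in present}),
        }
    return report


sample = [
    {"name": "John Doe", "city": "Boston"},
    {"name": "john doe", "city": ""},
    {"name": "Jane Roe", "city": "boston"},
]
for column, stats in profile(sample, ["name", "city"]).items():
    print(column, stats)
```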

Once you’ve profiled your data, you will know exactly which business rules to apply to clean and standardize your data most efficiently. You will also be able to quickly recognize and fill missing values, perhaps by purchasing 3rd party data.

Cleaner, more complete data reduces false positives and negatives significantly by increasing match accuracy because your data is now standardized. The fuzzy matching algorithms you use, the matching criteria you define, the weight you assign to different parameters, and the way you combine different algorithms and assign priority are all important factors in minimizing false positives and negatives too. But none of these are going to help much if you haven’t profiled and cleaned your data first. See how DataMatch Enterprise has helped 4,000+ customers in over 40 countries clean, deduplicate, and link their data efficiently.

Fuzzy Matching Scripts vs. Fuzzy Matching Software: Which is Better?

When it comes to fuzzy matching, using scripts vs software is always a big debate for most organizations. Let’s look at both options in detail.

Fuzzy Matching Scripts

Fuzzy logic can easily be applied from manual coding scripts that are available in various programming languages and applications. Some of these include:

- Python: Python libraries such as FuzzyWuzzy can be used to run string matching in an easy and intuitive way. Using the Python Record Linkage Toolkit, users can run several indexing methods, including sorted neighborhood and blocking, and identify duplicates using FuzzyWuzzy. Although Python is easy to use, it can be slower to run matches than other methods.

- Java: Third-party Java libraries such as java-string-similarity provide algorithms including Levenshtein, Jaccard Index, and Jaro-Winkler. Alternatively, a Java port of the Python library FuzzyWuzzy can be used to run matches.

- Excel: The Fuzzy Lookup add-in can be used to run fuzzy matching between two datasets. The add-in has a simple interface, including options to select the output columns as well as the number of matches and the similarity threshold. However, it can also produce a high number of false positives and may fail to identify some duplicates properly. An example of this is ‘ATT CORP’ and ‘AT&T Inc.’
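
For a sense of what the script route involves, here is a minimal Python sketch that matches incoming company names against a master list. To keep it dependency-free, it uses `difflib.get_close_matches` from the standard library rather than FuzzyWuzzy; the names and the 0.6 cutoff are illustrative assumptions.

```python
from difflib import get_close_matches

master = ["AT&T Inc.", "Verizon Communications", "T-Mobile US"]
incoming = ["ATT CORP", "Verizon Comunications", "TMobile US"]


def best_match(name, candidates, cutoff=0.6):
    """Return the closest candidate above the cutoff, or None."""
    lowered = {candidate.lower(): candidate for candidate in candidates}
    hits = get_close_matches(name.lower(), list(lowered), n=1, cutoff=cutoff)
    return lowered[hits[0]] if hits else None


for name in incoming:
    print(name, "->", best_match(name, master))
```

Even in this tiny example, the result depends heavily on the cutoff you choose, which is why scripts like this tend to need ongoing tuning.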

Fuzzy Matching Software

On the other hand, fuzzy matching software is equipped with one or several fuzzy logic algorithms, along with exact and phonetic matching, to identify and match records across millions of data points from multiple and disparate data sources, including relational databases, web applications, and CRMs.

Fuzzy matching tools come with prebuilt data quality functions, such as data profiling and data cleansing and standardization transformations, to efficiently refine and improve the accuracy of matches between two or more datasets.

Unlike matching scripts, such tools are far easier to deploy and run matches owing to a point-and-click interface.

Which is Better?

Choosing either of the two approaches comes down to the following factors:

Time

Matching scripts have the benefit of being easy to deploy at users’ convenience. However, the constant refinement and testing needed to ensure their efficiency, especially across hundreds and thousands of records, can involve weeks if not months of work. In scenarios where duplicates and matches have to be found more quickly to meet tight project deadlines, a fuzzy matching tool proves to be far more reliable and convenient, running matches across very large datasets within a few hours or days.

Cost

Manual coding scripts are inexpensive to use in comparison with matching tools, provided that the number of records is small. For datasets comprising millions or billions of records, however, the cost of using scripts can far outweigh that of matching tools considering the time and resources used to cater to the

Scalability

Fuzzy logic scripts tend to work better for a few thousand records where the variations in data are limited. Otherwise, the rules can fall apart and require more refinement, making scripts difficult to scale.

A fuzzy matching tool, by contrast, comes equipped with the capacity to run matches against millions of data points within a few hours, as well as batch and real-time automation capabilities to minimize repetitive tasks and man-hours.

Complexity of Data

Users may want to find matches or duplicates across a few thousand records. In contrast, federal agencies, public institutions, and companies often have non-homogenous datasets from multiple sources – Excel, CSV, relational databases, legacy mainframe data, and Hadoop-based repositories. For this, a dedicated matching tool can be more adept at ingesting all relevant sources, profiling all known data quality issues, and removing them using out-of-the-box cleansing transformations.

In the case of manual coding scripts, on the other hand, users have to write multiple complex fuzzy logic rules to account for the disparity in data and its anomalies – making it highly tedious and time-intensive.

Fuzzy Matching Made Easy, Fast, and Laser-Focused on Driving Business Value

Traditionally, fuzzy matching has been considered a complex, arcane art, where project costs are typically in the hundreds of thousands of dollars, taking months, if not years, to deliver tangible ROI. And even then, security, scalability, and accuracy concerns remain. That is no longer the case with modern data quality software. Based on decades of research and 4,000+ deployments across more than 40 countries, DataMatch Enterprise is a highly visual data cleansing application specifically designed to resolve data quality issues. The platform leverages multiple proprietary and standard algorithms to identify phonetic, fuzzy, miskeyed, abbreviated, and domain-specific variations.

Build scalable configurations for deduplication & record linkage, suppression, enhancement, extraction, and standardization of business and customer data and create a Single Source of Truth to maximize the impact of your data across the enterprise.

How to Run It in DataMatch Enterprise

Running fuzzy matching in DataMatch Enterprise is a simple, step-by-step process comprising the following:

- Data Import

- Data Profiling

- Data Cleansing and Standardization

- Match Configuration

- Match Definitions

- Match Results

Firstly, we import the datasets we will use to find matches and use the data preview option to glance through the records. In our example, these are ‘Customer Master’ and ‘New Prospect Records’ as shown below.

Secondly, we move on to the Data Profile module to identify all kinds of statistical data anomalies, errors, and potential problem areas that would need to be fixed or refined before moving on to any matching.

As shown below, the New Prospect Records dataset is profiled in terms of valid and invalid records, null values, distinct, numbers only, letters only, leading spaces, punctuation errors, and much more.
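For readers who want a feel for what such a profile measures, here is a rough Python sketch that counts a few of the indicators named above for a single column. It is an illustration only, not DataMatch Enterprise's profiling logic; the `profile_column` function and the sample values are made up for this example.

```python
import re

def profile_column(values):
    """Count a few simple quality indicators for one column of raw values."""
    # Treat None and empty strings as null for the purposes of this sketch.
    non_null = [v for v in values if v is not None and v != ""]
    return {
        "total": len(values),
        "null": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "numbers_only": sum(1 for v in non_null if re.fullmatch(r"\d+", v)),
        "letters_only": sum(1 for v in non_null if re.fullmatch(r"[A-Za-z]+", v)),
        "leading_spaces": sum(1 for v in non_null if v != v.lstrip()),
        "punctuation": sum(1 for v in non_null if re.search(r"[^\w\s]", v)),
    }

# Made-up sample values for a hypothetical 'City' column.
sample = ["Boston", " Austin", "Dallas.", "12345", None, "Boston", ""]
report = profile_column(sample)
for metric, count in report.items():
    print(f"{metric}: {count}")
```

Even a simple report like this points to what needs fixing before matching: a numeric value in a city field, a stray leading space, and trailing punctuation.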

Once profiling is complete, we proceed to the data cleansing and standardization module, where we fix casing errors, remove trailing and leading spaces, replace zeros with Os and vice versa, and parse fields like name and address into smaller components.
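The same kinds of fixes can be approximated in a script. The Python sketch below is a simplified illustration, not the tool's transformations: the helper names `clean_name`, `fix_zero_letter_o`, and `split_name` are hypothetical, and real names and addresses need much richer parsing rules.

```python
def clean_name(value: str) -> str:
    """Trim spaces, collapse inner whitespace, and apply title casing."""
    return " ".join(value.split()).title()

def fix_zero_letter_o(value: str, numeric: bool) -> str:
    """Swap look-alike characters: O -> 0 in numeric fields, 0 -> O in text."""
    if numeric:
        return value.replace("O", "0").replace("o", "0")
    # Assumes upper-case text; mixed-case text would need a case-aware rule.
    return value.replace("0", "O")

def split_name(full_name: str) -> dict:
    """Naive parse into first and last name; middle parts are ignored."""
    parts = clean_name(full_name).split()
    return {
        "first": parts[0] if parts else "",
        "last": parts[-1] if len(parts) > 1 else "",
    }

print(clean_name("  jOHN   doe "))
print(fix_zero_letter_o("9O21O", numeric=True))
print(fix_zero_letter_o("B0ST0N", numeric=False))
print(split_name("  jOHN   doe "))
```

Each rule here is deliberately narrow. Covering the full range of anomalies in real data means writing and maintaining many more rules like these.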

After refining our data, we select the type of match configuration we need for our matching activity: All, Between, Within, or None. For our example, we will select Between to find matches only across the two datasets.
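One way to think about these options is in terms of which record pairs get compared. The Python sketch below reflects our reading of the option names, which is an assumption rather than documented behavior: Between compares only across the two datasets, Within compares only inside each dataset, and All does both. The None option is left out because its behavior is not described here. The `candidate_pairs` function and the record IDs are hypothetical.

```python
from itertools import combinations, product

def candidate_pairs(left, right, mode):
    """Return record pairs to compare under an assumed reading of each mode."""
    if mode == "between":
        # Only pairs that span the two datasets.
        return list(product(left, right))
    if mode == "within":
        # Only pairs inside the same dataset.
        return list(combinations(left, 2)) + list(combinations(right, 2))
    if mode == "all":
        # Both cross-dataset and same-dataset pairs.
        return (candidate_pairs(left, right, "between")
                + candidate_pairs(left, right, "within"))
    raise ValueError(f"unsupported mode: {mode}")

# Made-up record IDs standing in for the two datasets.
customer_master = ["A1", "A2"]
new_prospects = ["B1", "B2", "B3"]

between = candidate_pairs(customer_master, new_prospects, "between")
within = candidate_pairs(customer_master, new_prospects, "within")
everything = candidate_pairs(customer_master, new_prospects, "all")
print(len(between), len(within), len(everything))
```

Choosing the narrowest option that answers the question keeps the number of comparisons, and the number of results to review, as small as possible.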

In Match Definitions, we select the match criteria: a ‘Fuzzy’ match (depending on our use case) with the match threshold level set at ‘90’, and an ‘Exact’ match for the City and State fields. We then click on ‘Match’.

Based on our match definition, dataset, and extent of cleansing and standardization, we get 526 matches, each with a corresponding match score of 100% or below. If we want more borderline candidates, including potential false positives, to inspect manually, we can go back and lower the threshold level.
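The logic of such a match definition can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the tool's scoring: the fuzzy comparison is assumed to apply to a name field, `difflib`'s ratio stands in for the similarity score, and the `name_score` and `find_matches` functions and the sample records are all made up.

```python
from difflib import SequenceMatcher

def name_score(a: str, b: str) -> float:
    """Similarity of two names on a 0-100 scale (difflib ratio as a stand-in)."""
    return 100 * SequenceMatcher(None, a.lower(), b.lower()).ratio()

def find_matches(masters, prospects, threshold=90):
    """Fuzzy match on name; require exact equality on city and state."""
    matches = []
    for m in masters:
        for p in prospects:
            # Exact fields act as a hard filter before any fuzzy scoring.
            if (m["city"], m["state"]) != (p["city"], p["state"]):
                continue
            score = name_score(m["name"], p["name"])
            if score >= threshold:
                matches.append((m["name"], p["name"], round(score, 1)))
    return matches

# Made-up records for illustration.
masters = [{"name": "Johnathan Doe", "city": "Austin", "state": "TX"}]
prospects = [
    {"name": "Jonathan Doe", "city": "Austin", "state": "TX"},
    {"name": "John Doe", "city": "Austin", "state": "TX"},
    {"name": "Johnathan Doe", "city": "Dallas", "state": "TX"},
]

strict = find_matches(masters, prospects, threshold=90)
loose = find_matches(masters, prospects, threshold=70)
print(len(strict), len(loose))
```

With these sample records, the threshold of 90 keeps only the closest spelling variant, while lowering it to 70 also brings in ‘John Doe’ for manual review. The Dallas record is never scored because it fails the exact match on City.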

For more information on how you can deploy fuzzy matching in DataMatch Enterprise for your business use-case, contact us today.
