Last Updated on December 11, 2025

When AI systems deliver inaccurate or inequitable results, many people immediately assume that something went wrong in the algorithms. Rarely do we look upstream – to the data pipelines or the preparation processes.

And almost never do we ask: Was the data even matched correctly in the first place?

In reality, data matching is one of the most underestimated risks behind AI bias and failure.

Mismatched, duplicated, or incorrectly merged records silently sabotage even the most sophisticated AI systems. They distort training data, misrepresent individuals, and embed biases that are nearly impossible to trace downstream. Yet this critical process often flies under the radar of AI ethics conversations.

If your organization is serious about responsible AI, it’s time to look upstream — to how your data is being joined, linked, and deduplicated. Because if your data foundation is flawed, your AI outcomes will be too — no matter how advanced your technology is or how fair, transparent, or explainable the model appears to be.

Ethical AI Begins with Ethical Data Matching

When people talk about ethical AI, the focus usually lands on model behavior: fairness, explainability, auditability. All these elements are important – but they’re only part of the picture.

Before an algorithm makes a decision, it consumes data. In AI workflows, data is used to:

- Train machine learning models

- Fuel recommender systems

- Identify individuals for targeting, intervention, or exclusion

- Create composite profiles that drive decision making

If the data used to build AI systems is:

- Merged incorrectly across systems

- Inflated with duplicates

- Shaped by poor linkage logic

…then bias gets baked in the system, and no amount of model tuning will fix it.

This is where data matching enters the ethics conversation.

Inaccurate joins distort reality — and when AI trains on those distortions, people pay the price. The way you connect, deduplicate, and unify data records sets the foundation for everything AI touches.

The Risks of Poor Data Matching: How Matching Errors Derail Responsible AI

Matching is often treated as a technical pre-processing step or a back-office data hygiene task. But it’s a strategic mistake.

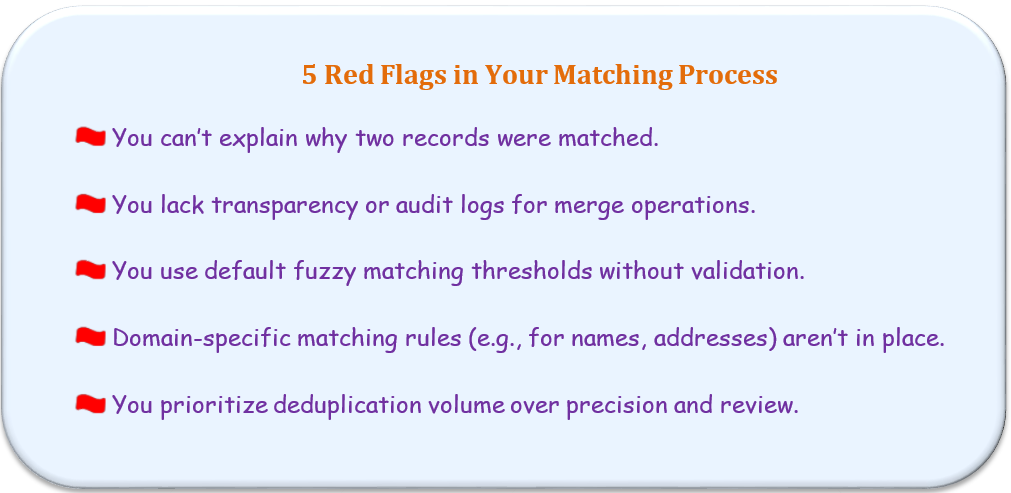

No AI model can overcome flawed inputs. Even if your models are carefully built and validated, bad input data can undermine them. And matching errors are a key vector for silent, systemic bias and inaccuracies. Here’s how they can creep in:

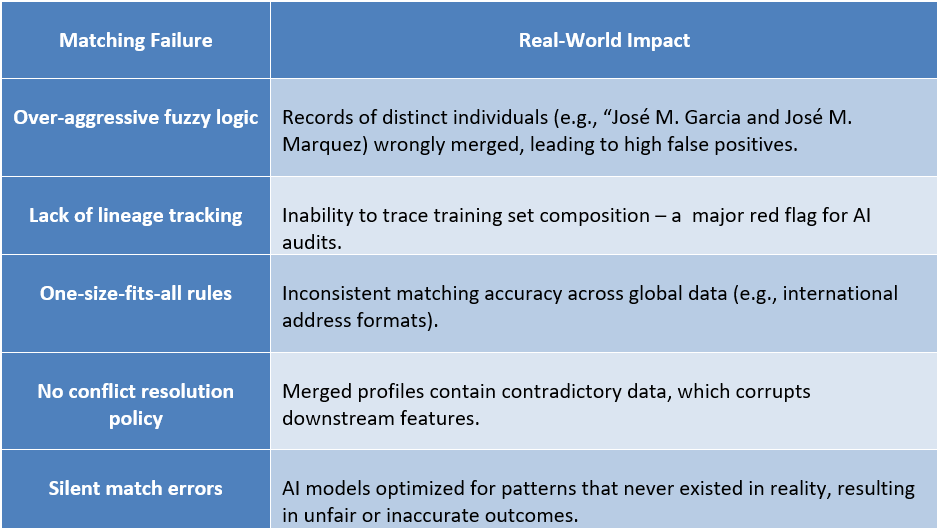

1. Over-aggressive fuzzy matching

Fuzzy logic is helpful, but dangerous without contextual safeguards, especially when it involves personal data or any other sensitive information. Using fuzzy matching blindly can create high false positives. What looks like a match on paper may be a conflation of two entirely different individuals, introducing silent errors that ripple across downstream systems.

2. Lack of lineage

If you can’t trace where your unified records came from or how they were created, you can’t audit your pipeline, prove compliance, or fix any issues that may emerge down the line.

3. Over-merging based on superficial similarity

Merging entities based on surface-level matches without checking supporting fields can corrupt entity resolution. This is especially common with international names or inconsistent address formats.

4. No survivorship logic

When two systems have conflicting data, who wins? Without rules, you’re introducing inconsistency and potential bias. Ethical matching lets you define survivorship rules intentionally.

When data matching goes unchecked, there’s a risk of models learning from bad data – which often results in wrong, unfair, unexplainable, or even harmful outcomes, especially in use cases like:

- AI-driven risk assessments

- Recommendation engines

- Insurance underwriting

- Loan approvals

- Public health analytics

- Fraud detection

Artificial intelligence models trained on poorly matched records create polluted outcomes. And because these errors are rarely visible in AI workflows, they often remain overlooked, which makes them especially dangerous, especially in regulated environments like government, healthcare, or finance where data protection and privacy are highly critical.

What Ethical Data Matching Looks Like

In the world of AI, good matching is more than just getting rid of duplicates. It’s about creating AI systems that are fair, explainable, and safe. That requires a shift from ad hoc scripts and black-box tools to intentional, governed practices. Here’s what that looks like in practice:

1. Explainability

Every match should have a traceable rationale. What fields contributed? Was the score above threshold? Would another reviewer agree? This not only allows you to defend each decision but also forms the basis for retraining models.

2. Human-in-the-loop review

Automated matching is efficient – but blind automation is dangerous. Responsible systems enable review of borderline (uncertain) or high-impact matches, so errors don’t get baked into critical processes.

3. Contextual rules

Matching logic must reflect the domain. What counts as a match in a B2B customer database isn’t the same as in a public health registry.

For example, matching “Jon Smythe” and “John Smith” might be acceptable in some use cases, but a critical error in others. Similarly, the patterns for names, addresses, product codes, or contact numbers can vary by industry, region, and language.

Ethical systems account for context when matching records. They let you define rules based on your data’s semantics and industry norms.

4. Confidence scoring

Responsible tools provide match confidence scores, so teams aren’t left guessing whether a match is strong enough. This helps avoid silent errors in AI inputs and allow organizations to define thresholds based on their tolerance for risk and error.

5. Transparent logs

Ethical matching leaves a trail, so if something goes wrong, you can trace it back to the source.

Every match, merge, override, or rejection is logged (recorded) and auditable (reviewable). This doesn’t just help proving compliance, but also in building trust.

Why You Can (Should) No Longer Ignore Responsible Data Matching

Data matching might not get the same spotlight as large language models or generative AI. But it’s one of the most consequential – and overlooked – steps in building ethical and accountable AI systems.

Things seem to be changing now, though.

From the EU AI Act and NIST’s AI RMF to the FTC’s data-related investigations, agencies are beginning to demand accountability for how AI systems are trained, including where the data came from and how it was prepared.

Early adopters of ethical data matching won’t just avoid penalties; they’ll contribute to building systems that are:

- More resilient to bias

- Easier to audit and explain

- More trusted by stakeholders; both internal and external

The organizations that take responsible matching seriously today will be the ones best equipped to navigate AI risks tomorrow and build systems that are not just powerful, but principled and trusted.

Want to future-proof your AI strategy with responsible data matching?

Book a personalized demo with our expert to see how DataMatch Enterprise (DME) can help you do it.