Most companies now understand that they must adopt new technologies and applications to scale up their business operations. But migrating data from a legacy system into a new system presents a major data quality challenge. Unless the organization has been actively using tools such as list matching software or a data cleansing solution, its data is likely to be flawed, corrupt, and erroneous.

It is therefore essential for an organization to invest in data cleansing before implementing any migration plan. The fundamental purpose is to derive usable data from years of outdated records. To make that possible, you have two essential choices: invest in data specialists or in data quality solutions.

The question is, should you hire a team or should you use a software solution?

In this guide, we’ll help you see both sides of the coin so you can make a better judgment call. We’ll cover the following topics:

- The Cost of Poor Data Quality

- Common Issues with Data and List Quality

- Approaches to Data Quality Issues

- Key Features of List Matching Software

- Amec Foster Wheeler Case Study

Let’s get started.

The Cost of Poor Data Quality

Poor data quality refers to data that contains duplicates, mismatched names, abbreviations, non-standardized values (NY vs NYC vs New York vs New York City), and incomplete zip codes or email addresses, among other problems.

The cost of poor data quality is staggering. Poor data is estimated to cause $3.1 trillion in annual losses in the U.S. alone.

Let’s take the example of Company A, a large construction equipment supplier with multiple data silos. Its 2020 objective was to move its legacy system to a new cloud system and streamline its business processes.

The company was aware of its data quality challenges. Over the years, multiple departments had recorded data using multiple tools, and with no standardization or centralized data management system in place, the company faced a significant data cleansing challenge.

The first step in any data cleansing process is to analyze the data lists and identify the key issues. The focus is on lists because data matching solutions work by matching lists of records against each other. The main goal is to remove duplicate, void, null, or incomplete records so the company has accurate data when it moves into the new system.

Common Issues with Data List Quality

Database tables display records in the form of lists. Continuing the example of Company A, chances are that some of its lists are repeated or duplicated, while others contain inaccurate or inconsistent information.

Without any set standards or system, sales reps have likely been updating their lists without paying attention to the quality of the information. Abbreviated names, billing information recorded to different standards, and outdated addresses are some of the most common issues with data lists.

Let’s take a look at each of these issues in detail.

List Duplication: This often happens when a customer’s data is recorded twice under different email addresses or name variants. It can also happen when the same customer goes by two different names (usually after a name change following marriage) and enters conflicting information in a form or in billing details. If the name field is used as the unique identifier in a database, the same customer ends up recorded twice.
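
To make the name-as-identifier problem concrete, here is a minimal Python sketch. It assumes each record carries an email field that is a more stable key than the name; the sample records and the `find_duplicates_by_email` helper are hypothetical, and real matching tools combine many fields rather than relying on email alone.

```python
from collections import defaultdict

# Hypothetical records: the same person appears under a maiden and a married name.
records = [
    {"id": 1, "name": "Catherine Jones", "email": "cjones@example.com"},
    {"id": 2, "name": "Catherine Smith", "email": "CJones@Example.com "},
    {"id": 3, "name": "Carl Brown", "email": "carl.brown@example.com"},
]

def find_duplicates_by_email(rows):
    """Group record IDs by normalized email and return only groups with more than one ID."""
    groups = defaultdict(list)
    for row in rows:
        key = row["email"].strip().lower()  # ignore case and stray whitespace
        groups[key].append(row["id"])
    return {email: ids for email, ids in groups.items() if len(ids) > 1}

# Keyed on name alone, records 1 and 2 look like two different customers.
print(len({r["name"] for r in records}))  # 3
print(find_duplicates_by_email(records))  # {'cjones@example.com': [1, 2]}
```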

Data Inconsistency: This is a recurring problem in most databases and one that is extremely difficult to resolve. Human error causes some inconsistencies, but most of the time the root cause is a lack of data standardization. Name variations such as Cath vs Catherine or Carl vs Karl, and city name variations such as NYC vs NY, are not human errors; they are legitimate variations that modern databases have to handle by implementing standardization.
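
One simple way to standardize such variants is a lookup table that maps each known variant to a canonical form. The Python sketch below assumes hand-maintained tables (`CITY_CANONICAL` and `FIRST_NAME_CANONICAL` are hypothetical); deciding whether "NY" means the city or the state is itself a business rule you would need to settle first.

```python
# Hypothetical lookup tables mapping known variants to one canonical form.
CITY_CANONICAL = {
    "ny": "New York",
    "nyc": "New York",
    "new york": "New York",
    "new york city": "New York",
}
FIRST_NAME_CANONICAL = {
    "cath": "Catherine",
    "cathy": "Catherine",
    "catherine": "Catherine",
}

def standardize(value, table):
    """Return the canonical form of value, or the trimmed original if no rule applies."""
    key = value.strip().lower()
    return table.get(key, value.strip())

cities = ["NY", "nyc", "New York City", "Boston"]
print([standardize(c, CITY_CANONICAL) for c in cities])
# ['New York', 'New York', 'New York', 'Boston']
```

Unknown values pass through unchanged rather than being guessed at, so they can be reviewed separately.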

Disparate Data: In databases, disparate data refers to unstructured data, or data that is distinctly different in type, quality, and character. A good example is airline data, where one customer is represented by multiple data points, such as passport number, booking ID, customer ID, and customer name, stored across multiple databases. The booking database, the customer service ticketing system, and the customer support system may each hold different data about the same customer. If these databases do not share information, data quality suffers significantly. All this disparity makes it difficult to generate the single consolidated list the airline would need if it wanted to study its customers’ behavior.
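
The sketch below shows what consolidation looks like in the easy case, assuming all three systems already share a `customer_id` key. The sample rows and the `consolidate` helper are hypothetical; in practice systems often lack a shared key, which is exactly why the matching techniques discussed later are needed.

```python
from collections import defaultdict

# Hypothetical extracts from three airline systems that happen to share a customer ID.
bookings = [{"customer_id": "C100", "booking_id": "BK-1", "name": "Ana Lopez"}]
tickets = [{"customer_id": "C100", "ticket_id": "T-77", "issue": "seat change"}]
support = [{"customer_id": "C100", "passport_no": "X1234567"}]

def consolidate(*sources):
    """Merge rows from several systems into one profile per customer_id."""
    profiles = defaultdict(dict)
    for source in sources:
        for row in source:
            profile = profiles[row["customer_id"]]
            for field, value in row.items():
                # Keep the first value seen for a field; later sources only fill gaps.
                profile.setdefault(field, value)
    return dict(profiles)

print(consolidate(bookings, tickets, support)["C100"])
```

The "first value wins" rule is a deliberately simple assumption; real tools let you define which source is trusted for each field.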

The larger and more complex your database, the higher the chances of it being corrupt or erroneous.

Approaches to Data Quality Issues

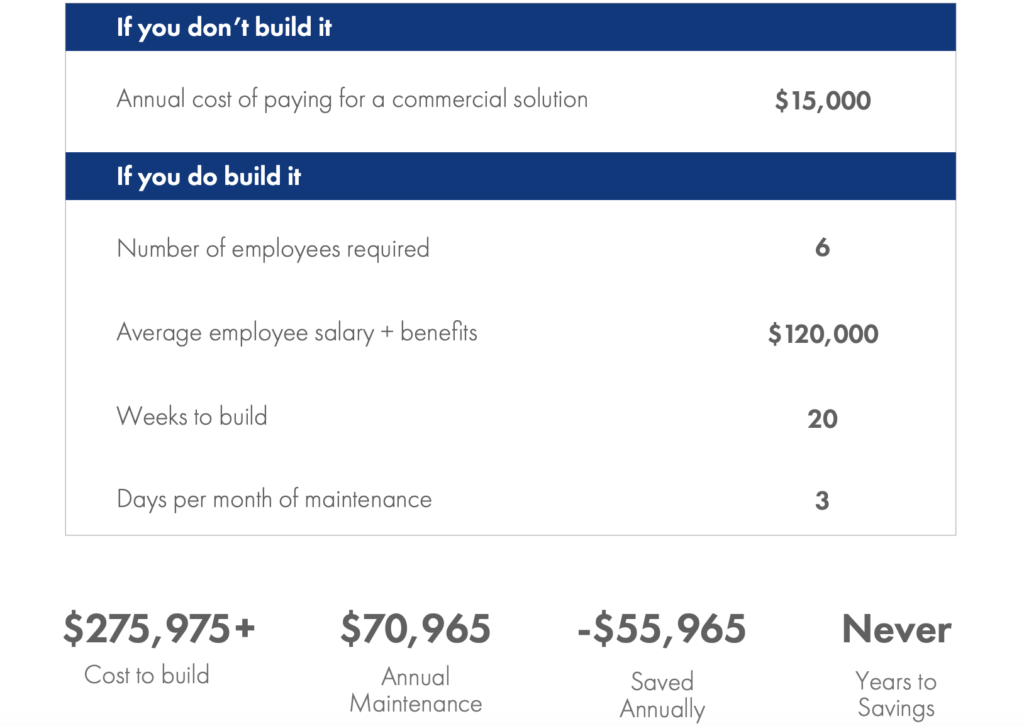

There are two approaches to resolving data quality issues: hire a team or invest in a software solution. Many companies choose to build an in-house data cleansing solution, either by employing specialists or by assigning the work to their IT team, only to be disappointed by the delays and the cost of investing in people, systems, and resources.

In contrast, investing in software offers more flexibility, does the job faster, and costs significantly less. The only catch? Out of the multitude of options available, you have to find the solution that best fits your business requirements.

1. The ‘Hire a Team to Do It’ Approach

Most organizations, large or small, have a dedicated IT team. Optimizing data quality is not rocket science, but it is the kind of work that IT teams rarely have the time or focus for.

The result? When data analysts or specialists are called in to make sense of data, they are presented with outdated, incomplete or incoherent lists.

You then need to hire additional team members to create algorithms that make sense of the data. Even then, the results may not be accurate or precise.

In the midst of all this, you’re spending hundreds of thousands of dollars on new hires and new processes, and spending months, if not years, making sure everything is in order.

Here’s a cost breakdown.

2. The Software Approach

Many organizations have come to realize that hiring a team to sort out their database is an expensive and counterproductive approach.

The other option is to invest in a software solution, of which there are several types. Best-in-class solutions from vendors such as IBM, SAS, Informatica, and Oracle cater to enterprise-level data; however, each of them requires trained specialists to operate.

Then there are top-tier solutions such as Talend, Ataccama, and Informatica that offer a range of products covering data engineering, cloud integration, data security, and much more. These solutions are designed for large enterprises that want a comprehensive data solution.

Finally, there is mid-tier, DIY list matching software that uses fuzzy matching methods (matching strings with similar patterns) to identify and remove duplicates. In the long run, though, you need more than fuzzy matching alone to clean your data.
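
For readers unfamiliar with fuzzy matching, here is a minimal sketch using Python's standard-library `difflib.SequenceMatcher` as a stand-in similarity measure. It is not the algorithm any particular product uses, and the `fuzzy_duplicates` helper and its 0.85 threshold are hypothetical choices for illustration.

```python
from difflib import SequenceMatcher

def similarity(a, b):
    """Return a 0..1 similarity ratio between two strings, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def fuzzy_duplicates(names, threshold=0.85):
    """Return (name, name, score) for every pair whose similarity meets the threshold."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            score = similarity(names[i], names[j])
            if score >= threshold:
                pairs.append((names[i], names[j], round(score, 2)))
    return pairs

names = ["Jon Smith", "John Smith", "Jane Doe"]
print(fuzzy_duplicates(names))
```

This also illustrates the limitation the text points to: string similarity alone would happily pair two different people with similar names, and it compares every name with every other name, which does not scale.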

Key Features of List Matching Software

With so many choices, how do you determine which software solution works best for you?

To answer that, you need to know which key features to look for in data cleansing or list matching software, and how each feature helps you accomplish your data matching and cleansing goals.

Data Profiling

Data profiling is the process of examining the accuracy, completeness, and validity of your data. A good list matching tool lets you profile your data before migrating from a legacy system to a new one. During profiling, your data is scanned for blank or null values, anomalous patterns, and duplicates. For legacy systems with years of data and thousands of errors, data profiling is a necessity: it identifies data quality issues at the source, saving you time at later stages.
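
A basic profile can be sketched in a few lines of Python. This assumes records arrive as dictionaries with identical keys; the sample rows, the `profile` helper, and the ZIP-code pattern rule are hypothetical, and real profiling tools infer many more patterns automatically.

```python
import re
from collections import Counter

# Hypothetical legacy extract; None and "" both count as missing values.
rows = [
    {"name": "Ann Lee", "zip": "10011", "email": "ann@example.com"},
    {"name": "Ann Lee", "zip": "10011", "email": "ann@example.com"},
    {"name": "Bo Chan", "zip": "1001", "email": ""},
    {"name": None, "zip": "ABCDE", "email": "bo@example"},
]

# Assumed validity rule for this sketch: a US ZIP code or ZIP+4.
ZIP_PATTERN = re.compile(r"^\d{5}(-\d{4})?$")

def profile(rows):
    """Count missing values per field, ZIP codes breaking the pattern, and exact duplicate rows."""
    fields = list(rows[0])
    report = {}
    for field in fields:
        report[f"{field}_missing"] = sum(1 for r in rows if r[field] in (None, ""))
    report["zip_bad_pattern"] = sum(
        1 for r in rows if r["zip"] and not ZIP_PATTERN.match(r["zip"])
    )
    counts = Counter(tuple(r[f] for f in fields) for r in rows)
    # Every copy beyond the first occurrence counts as one duplicate.
    report["duplicate_rows"] = sum(c - 1 for c in counts.values())
    return report

print(profile(rows))
# {'name_missing': 1, 'zip_missing': 0, 'email_missing': 1, 'zip_bad_pattern': 2, 'duplicate_rows': 1}
```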

Semantic Tagging

When data comes in from different sources, it’s often difficult to make sense of all the fields that contain identifying information. For example, birthdates are often stored in a field named Date, with no indication of whether it holds a birthdate or an event date. Applying the semantic tag ‘birthdate’ to that Date field later helps in the identity resolution process.

Personally identifiable information could include first name, last name, email addresses, billing addresses, etc. The purpose of semantic tagging is to make sense of the data and speed up the data cleansing process.
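
As an illustration only, semantic tagging can be approximated with rules that look at both a column's header and its values. The `tag_column` function below is hypothetical, and the age-range heuristic for telling a birthdate from an event date is an assumption made for this sketch, not a method described in the text.

```python
from datetime import date

def tag_column(header, values, today=None):
    """Guess a semantic tag for a column from its header and its non-empty values."""
    today = today or date.today()
    h = header.strip().lower()
    filled = [v for v in values if v and v.strip()]
    if filled and all("@" in v for v in filled):
        return "email"
    if "birth" in h or h == "dob":
        return "birthdate"
    if h == "date" and filled:
        years = [date.fromisoformat(v.strip()).year for v in filled]
        # Assumed heuristic: if every value implies an age of 18 to 110, call it a birthdate.
        if all(18 <= today.year - y <= 110 for y in years):
            return "birthdate"
        return "event_date"
    if h in ("first name", "first_name", "fname"):
        return "first_name"
    if h in ("last name", "last_name", "lname", "surname"):
        return "last_name"
    return "unknown"

print(tag_column("Date", ["1985-04-12", "1990-11-30"]))  # birthdate
print(tag_column("Date", ["2019-06-01", "2019-06-02"]))  # event_date
```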

Data Cleansing

Once the fields have been tagged, the next step is data normalization and cleansing: any fields that are not standardized are normalized. For example, the address ‘47 W. 13th St. NY, US’ is normalized to ‘47 W 13th STREET, New York, USA’.

During data cleansing, spam or incomplete data is tagged with labels such as Not Available, Null, or Declined so that bogus data is sorted out and cleansed early on.
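
The two cleansing steps above can be sketched in Python with small lookup tables. The tables and the `normalize_address` and `tag_bogus` helpers are hypothetical, and the address parser assumes a simple ‘street city, country’ layout with a one-word city; production tools use postal reference data instead.

```python
# Hypothetical lookup tables for this sketch; real tools rely on postal reference data.
STREET_SUFFIXES = {"st": "STREET", "ave": "AVENUE", "rd": "ROAD"}
CITIES = {"ny": "New York", "nyc": "New York"}
COUNTRIES = {"us": "USA", "usa": "USA"}
PLACEHOLDER_TAGS = {
    "": "Null", "null": "Null", "none": "Null",
    "n/a": "Not Available", "na": "Not Available", "not available": "Not Available",
    "declined": "Declined", "refused": "Declined",
}

def normalize_address(raw):
    """Normalize 'street city, country' text, assuming the city is the last word before the comma."""
    street_city, sep, country = raw.rpartition(",")
    if not sep or not street_city.strip():
        raise ValueError(f"expected 'street city, country', got {raw!r}")
    tokens = street_city.replace(".", "").split()
    city = CITIES.get(tokens[-1].lower(), tokens[-1])
    street = " ".join(STREET_SUFFIXES.get(t.lower(), t) for t in tokens[:-1])
    country = COUNTRIES.get(country.strip().lower(), country.strip())
    return f"{street}, {city}, {country}"

def tag_bogus(value):
    """Return a standard tag for placeholder or empty values, or None if the value looks usable."""
    if value is None:
        return "Null"
    return PLACEHOLDER_TAGS.get(value.strip().lower())

print(normalize_address("47 W. 13th St. NY, US"))  # 47 W 13th STREET, New York, USA
print(tag_bogus("N/A"), tag_bogus("  "), tag_bogus("declined"))  # Not Available Null Declined
```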

Matching

Matching is the most important function in the identity resolution process, and almost every high-end data solution offers data matching as a core service. This is the step in which the software compares records and identifies connections between them. Three main processes are used to carry this out on enterprise-level data.

- Blocking: A matching activity may involve millions of records that need to be compared with each other. If your data set contains, say, a million records, comparing every record with every other record means roughly 500 billion comparisons (1 million × 1 million, halved because each pair only needs to be compared once). That is extremely inefficient and slow, not to mention computationally prohibitive. To avoid this, a simple blocking rule divides the set of records into smaller ‘blocks’, and only records within the same block are compared against each other. Each block groups records that are more likely to be matches. For example, birth dates can be split into BirthYear, BirthMonth, and BirthDay columns, and all three columns can be used together as the blocking key for your first round of block matching.

- Pairwise Comparison and Scoring: This step compares pairs of records within a block and scores how closely they match. For example, two records that share a BirthDate block can be compared on their Name fields to determine whether they represent the same person.

- Clustering: A necessary aspect of data matching, clustering produces faster match results by transforming one or more identifier values in the data set and grouping records that share the transformed value. For example, names ending in ‘Smith’ may be clustered into one group, which is then reviewed further for conflicting matches. Records in different clusters are never compared with each other, and clusters containing a single record are not used in matching.
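
The three steps above can be sketched end to end in Python. This is a simplified illustration, not how any particular product works: it blocks on the (BirthYear, BirthMonth, BirthDay) key, skips single-record groups as the clustering step describes, and scores pairs within a block by name similarity using the standard-library `difflib.SequenceMatcher`. The sample records, the helper names, and the 0.85 threshold are all hypothetical.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical records with birth dates already split into (year, month, day).
records = [
    {"id": 1, "name": "Catherine Jones", "birth": (1985, 4, 12)},
    {"id": 2, "name": "Cathrine Jones", "birth": (1985, 4, 12)},
    {"id": 3, "name": "Karl Meyer", "birth": (1990, 1, 3)},
    {"id": 4, "name": "Carl Meyer", "birth": (1990, 1, 3)},
    {"id": 5, "name": "Ana Lopez", "birth": (1979, 7, 21)},
]

def naive_pair_count(n):
    """Number of comparisons without blocking: n * (n - 1) / 2."""
    return n * (n - 1) // 2

def build_blocks(rows):
    """Blocking: group records by birth date and drop groups with a single record."""
    blocks = defaultdict(list)
    for row in rows:
        blocks[row["birth"]].append(row)
    return {key: members for key, members in blocks.items() if len(members) > 1}

def score(a, b):
    """Pairwise scoring: name similarity in 0..1 (birth dates already agree within a block)."""
    return SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()

def match(rows, threshold=0.85):
    """Compare pairs only within blocks and keep those scoring at or above the threshold."""
    matches = []
    for members in build_blocks(rows).values():
        for a, b in combinations(members, 2):
            if score(a, b) >= threshold:
                matches.append((a["id"], b["id"]))
    return matches

print(naive_pair_count(1_000_000))  # 499999500000
print(match(records))  # [(1, 2), (3, 4)]
```

Here only two pairs are scored instead of the ten an all-pairs comparison of five records would need; a real tool would also weight several fields rather than relying on the name alone.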

Data Standardization

Once you’ve sorted out your data, the next step is to clean your list. This is done by deleting duplicate entries, filtering out void, null, or incomplete data, and performing list scrubbing to ensure your data is squeaky clean.

At the end of the process, the data is validated and the final versions are communicated to the various departments in the organization. This is where you need to implement data standardization, which means storing all of your data in a common format. Everyone who handles the data must be trained on these standards.

The purpose of collecting data is not quantity but quality. You don’t want 100 email addresses; you want 100 accurate, complete, usable email addresses. In the real world, as many as 28 out of every 100 addresses may be invalid or useless.
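
A basic email list scrub can be sketched as follows. The `scrub_emails` helper and its regular expression are hypothetical and deliberately simple: they check only that an address looks like `something@domain.tld`, and cannot tell whether a mailbox actually exists, which requires a separate verification step.

```python
import re

# Deliberately simple syntax check (an assumption for this sketch): local@domain.tld, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")

def scrub_emails(raw_emails):
    """Trim and lowercase addresses, drop invalid ones, and remove duplicates, keeping order."""
    seen = set()
    clean = []
    for raw in raw_emails:
        if raw is None:
            continue
        # Lowercasing the whole address is a common simplification.
        email = raw.strip().lower()
        if not EMAIL_PATTERN.match(email) or email in seen:
            continue
        seen.add(email)
        clean.append(email)
    return clean

emails = ["Ann@Example.com", "ann@example.com ", "bo@example", None, "no spaces@x.com", "cy@mail.org"]
print(scrub_emails(emails))  # ['ann@example.com', 'cy@mail.org']
```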

Data cleansing and standardization, therefore, ensure that you have data you can work with and data you can trust.

Additional Features of a Best-in-Class List Matching Software

Additionally, here are a few important features that great list matching software must have:

Fast: The purpose of using list scrubbing software is to get results as fast as possible. Organizations can’t afford to wait months or years for specific information; if they need the sales records for a new branch, they need them fast. Software can retrieve this in a matter of minutes, whereas a team may spend hours, if not days, running multiple queries to get the same data.

Accurate: Accuracy is a critical part of data quality management. Top-tier list matching software removes duplicates precisely, ensuring the accuracy of your data. In 15 independent studies, Data Ladder’s matching accuracy was measured at 96% across three data sets ranging from 40K to 4 million records, higher than IBM at 88% and SAS at 84%.

Complete tools: When the objective is data quality management, you need a complete set of tools and not just a standalone solution. The right tool makes it possible for you to profile, match, clean and standardize data.

Easy Integration: The software should connect easily to the platforms where your data already lives. For example, Data Ladder integrates with more than 150 data platforms; whether you use Salesforce or Zoho, you can simply plug your database into Data Ladder.

Scalable: Processing a few million records is easy. Processing a few hundred million records is an altogether different challenge, one that only list scrubbing software designed to scale can handle. When you invest in a data quality solution, make sure it can keep up as your data grows.

Amec Foster Wheeler Case Study

Amec Foster Wheeler plc was a British multinational consultancy, engineering and project management company headquartered in London, United Kingdom until its acquisition by and merger into Wood Group in October 2017.

With the increasing demands of the environmental engineering industry, the company strongly needed to streamline its business processes for an upcoming influx of projects and human resources tasks.

The company was in the process of migrating to a new finance and HR system and knew that the quality of its data needed to improve before taking that important next step.

Using DataMatch™, Data Ladder’s data quality software, the company was able to manage its deduplication efforts. Facing the large task of migrating all of its existing financial and human resources information to a new system, it also planned to use DataMatch™ to clean and repopulate its systems.

The benefit? With best-in-class data cleansing and deduplication capabilities, combined with customized training from Data Ladder specialists, the client was able not only to keep its data accurate but also to maintain the high level of data quality needed to migrate into its new financial and HR systems.

You can download the case study to read the challenges, business situation and how our solutions helped the company achieve its desired business and data quality goals.

Conclusion

Data quality and list matching issues have been a struggle for enterprises for ages. Today, though, there are dozens of solutions available to help you clean data. That said, every business’s needs are different and may require a combination of tools. You may want to use Data Ladder’s DataMatch™ to clean your data but Talend’s services for cloud migration.

When it comes to data quality, there is no universal solution, but that shouldn’t hold you back.

Do not let bad data affect your business growth.