While surveying 1900 data teams, more than 60% cited too many data sources and inconsistent data as the biggest data quality challenge they encounter. But when faced with the task of fixing data quality, data leaders often find themselves at a difficult spot as crucial decisions must be made. One of the most important decisions to make is: at what point of the data lifecycle should we test and fix data quality?

To answer this question, a few aspects must be considered. Since many companies regularly produce huge amounts of data and consistently use it throughout the organization, a reactive approach – where the data is cleaned after it is stored – may not be the best choice, since it impacts data reliability and availability. Deploying a central data quality firewall – where the data is tested and treated before it is stored in the database – could be a better option in such cases.

To know more about the differences between the two approaches, check out our latest blog: Batch processing versus real-time data quality validation.

In most reactive approaches, data stewards or other data quality team members use a software interface to test and fix data quality. But in a proactive approach, this task is usually handled through an API. In this blog, we will look at different aspects to consider while deploying a data quality firewall using such APIs. Let’s get started.

What is a data quality API?

Simply, an API (Application Programming Interface) means a software intermediary that allows applications to talk to one another. An API resides between two software applications and handles the requests/responses being transmitted. Usually, when you want to integrate or connect two systems, you do it with the help of an API.

Similarly, a data quality API means:

A software intermediary that serves requests/responses for various data quality functions.

Architectural implementation of a data quality API

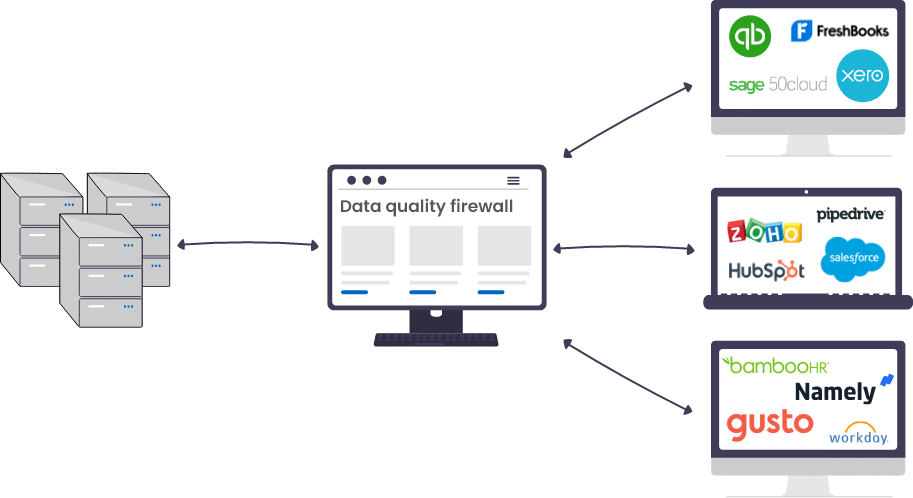

A data quality API is often referred to by different names. For example, data quality firewall, real-time data quality validation, central data quality engine, etc. Such names are given since the API functions between the application that captures data and the database that stores it.

Data quality APIs (just like any other API) are built on event-driven architecture. They are triggered when an event takes place. So, whenever new data comes through a connected application or existing data is updated, it routes through the API first (where data quality is verified), and then it’s directed to the source database. This makes the API act as a gateway between a data capturing application and a data storing source that ensures no data quality errors are migrated from the first end to the second one.

Example of real-time data quality validation for customer data

When you deploy a central data quality firewall, the incoming data is checked for data quality in near real-time. For example, when a change is made to any customer record or when a new customer record is created in any connected application, the update is first sent to the central data quality engine. Here, the change is verified against the configured data quality definition, such as ensuring required fields are not blank, the values follow standard format and pattern, a new customer record doesn’t possibly match an existing customer record, and so on.

If data quality errors are found, a list of transformation rules are executed to clean the data. In some cases, you may need a data quality steward to intervene and make decisions where data values are ambiguous and cannot be well-processed by configured algorithms. For example, there could be a 60% possibility that a new customer record is a duplicate, and someone may need to manually verify and resolve the issue. Once that is done, the cleaned, matched, and verified data record is then sent to a destination source, which could be a master dataset, data warehouse, business intelligence system, etc.

An example of such architecture is diagrammatically shown below:

Functions of a data quality API

A good data quality API is capable of executing different kinds of data quality functions on incoming data. Depending on the processes included in your data quality framework, you can subscribe to the required functions through the API. But since most organizations cannot afford to let any recurring or new data quality issues fall through the system, they mostly subscribe to as many data quality validation and fixing techniques the API offers.

The most common functions exposed by a data quality API include:

1. Connect to data sources

This is the primary and most beneficial functionality a data quality API offers. Organizations use multiple data generating applications, for example, website tracking tools, marketing automation tools, CRMs, etc. For this reason, a data quality API must be able to connect and talk to these different applications, as well as the destination database where the incoming data usually ends up.

2. Run data quality checks

Whenever an event occurs in a connected application (a new record is created or an existing one is updated), the API can assess and profile the incoming data for data quality errors. This is done by executing statistical algorithms that assess incoming data and validating whether it conforms to the data quality definition. Such checks include ensuring that:

- Required fields are not left empty,

- Fields follow the correct data type, pattern and format, and fall between the valid range,

- A new record is unique and is not a duplicate of an existing record,

- The new data is validated against custom business rules.

3. Fix data quality issues

A data quality API offers a number of functions that can fix the errors and issues discovered during data profiling. Such functions include (but are not limited to):

- Parsing aggregated fields into sub-components for creating more meaningful field values,

- Transforming data types, patterns, and formats wherever needed,

- Removing unnecessary spaces, characters, or words,

- Converting abbreviations into proper words,

- Overwriting or merging a new record with the duplicate one (in case a record for the same entity already exists).

4. Raise alerts for manual review

Sometimes, a data quality API is unable to fix certain data quality issues that are complex in nature. In such cases, alerts are raised to appropriate stakeholders (data stewards or data analysts) so that the errors can be manually reviewed and one of the suggested actions can be taken. For example, a new record is suspected to be a duplicate with a likelihood ratio of 65%. Such ambiguous situations require human intervention to resolve the problem and make the right decision.

5. Move data to destination source

Once the data is validated to follow the required data quality standard, the final function the API performs is moving it to the destination source. Sometimes this is simply a single database, while in other cases, there could be multiple output locations, such as business intelligence system, data warehouse, or other third-party applications, etc.

Benefits of a data quality API

Here are some benefits of deploying a central data quality firewall:

1. Maximize data reliability and availability

According to Seagate’s Rethink Data Report 2020, only 32% of enterprise data is put to work while the rest of 68% is lost due to data unavailability and unreliability.

One of the biggest benefits of real-time data quality validation is that it ensures reliable state of data at most times by validating and fixing data quality instantly after every update. Since a data quality API processes, cleans, and standardizes the data as soon as it enters the system, it is more likely to be available when a consumer queries the data to perform routine tasks.

2. Enhance business and operational efficiency

A recent survey shows that 24% of data teams use tools to find data quality issues, but they are typically left unresolved.

Most data quality tools have the capability to catch problems and raise alerts in case the data quality deteriorates below an acceptable threshold. But still, they leave out an important aspect: automating the execution of data quality processes (whether based on time or certain events) and resolving the issues automatically. A lack of this strategy forces human intervention – which means, someone has to trigger, oversee, and end data quality processes in the tool to fix these issues.

This is a big overhead that a data quality firewall easily resolves. Organizations want to invest in creating a central data quality engine that is capable of running advanced data quality techniques with minimal human intervention. This positively impacts the business’s operational efficiency and team productivity.

3. Build custom solutions

Nowadays, the definition of quality data is controlled by its organization and depends on specialized business rules. Data validation techniques implemented in data entry forms or executed through a year-old python script are not enough. Organizations are now looking to build their own custom data quality engine – something that is engineered for their specific needs. This is where a data quality API offers huge benefits; whether you want to design your own data quality framework, data management architecture, or custom data solutions.

4. Gain more control over data quality validation

The best part of an API is the personalization or customization capability it offers. This can allow you to have more control over how your processes work and the output they create. For example, you can define custom business rules for data quality validation, as well as configure thresholds and variables that are appropriate for your organizational datasets.

Another example of this is integrating a custom-built issue tracker portal into the system that sends alerts to data stewards whenever something needs immediate attention. Data stewards can use the portal to perform manual review and override decisions wherever necessary.

5. Implement effective data governance policies

Another benefit of deploying a central data quality firewall is to ensure effective implementation of data governance policies. The term data governance usually refers to a collection of roles, policies, workflows, standards, and metrics, that ensure efficient information usage and security, and enables a company to reach its business objectives.

Custom implementation of a data quality solution using an API can help create the required data roles and permissions, design workflows for verifying information updates, collaborate to merge multiple data assets, track who updated information and when, etc.

6. Smart search data sources

Since a data quality firewall connects to all main data sources of an organization, there are hidden benefits in this architecture, and one of them is smart searching data across all sources. This is beneficial when businesses want to intelligently query data from one or more databases without using advanced SQL scripts or programming languages. A data quality firewall can help do that since it houses complex and advanced data matching algorithms.

For example, when you search connected sources for records having the First Name as Elizabeth, the API executes logical business rules to query and match data – including fuzzy, phonetic, and domain-specific data matching techniques. This offers smart results in real-time where the records having a possible variation of the word Elizabeth as the First Name are also fetched and shown, such as Elisabeth, Alizabeth, Lisa, Beth, etc.

7. Reduce hassle of import/reimport/export

Another benefit of deploying a data quality API is that it reduces the hassle of exporting, importing, and reimporting data. This is something that must be done while using a standalone data quality tool to clean, match, and verify datasets. With an API, you can do all the cleaning, matching and address verification without ever leaving your source system. It lets your data quality tool talk directly with multiple data sources to find all the associated records. It can also make any changes to the data automatically, saving you time and money, and reducing the chances of errors creeping in.

8. Automatic appending to data warehouses

Organizations store and maintain tons of historical data records about customers, products, and vendors in data warehouses. Millions of such records are generated daily or weekly. But before new records can be moved to the core data warehouse, it must be tested for appropriate data quality standards, and in the case of duplicates, it must be matched to ensure that the upcoming record enriches the old one, and is not created again as a new record.

This is where a data quality API performs exceptionally well – processing large quantities of data in near real-time in the background, and pulling unique ID of an existing record, performing data matching to identify exact or fuzzy matches, and appending new data attributes to the existing record.

Conclusion

Implementing consistent, automated, and repeatable data quality measures can help your organization to attain and maintain quality of data across all datasets in real-time.

Data Ladder has served data quality solutions to its clients for over a decade now. DataMatch Enterprise is one of its leading data quality products – available as a standalone application as well as an integrable API – that allows you to use any data quality management function in your custom or existing application, and set it up for real-time data source connection, profiling, cleansing, matching, deduplication, and merge purge.

You can download the free trial today, or schedule a personalized session with our experts to understand how DME’s API capabilities can help you to build your own custom solution and get the most out of your data.